You're an expert at... using Claude's Workbench

Introduction

“You are an expert in” is a well known prompting ritual, and a standard piece of advice - but is it effective? Recent research describes it as “not working”.

At the same time, the Anthropic API documentation says that “With a role, Claude catches critical issues that could cost millions”… so it’s a potentially high stakes question.

Role Prompting Tip from Anthropic API Docs

This is a good excuse for us to try out the new Anthropic Developer Console that lets us set up Prompts and Evaulations for testing. So, let’s delve right in.

Testing

1. Review the API documentation

There are a couple of examples of Role Prompting supplied in the API Documentation: Legal contract analysis and Financial analysis. The examples demonstrate a strong difference in outputs.

We’ll use the Financial analysis examples for our test.

Financial Analysis Examples

Without role prompt:

| Role | Content |

|---|---|

| User | Analyze this dataset of our Q2 financials: {{FINANCIALS}} Highlight key trends and recommend actions. |

| Assistant | Here’s a summary of your Q2 financials: 1. Revenue: $15.2M, up 22% YoY. Enterprise client base grew 30%. 2. Gross Margin: 72%, up 3% from Q1. 3. EBITDA Margin: 18%, down 2% due to R&D investments. 4. Cash Flow: $4.1M operating, $28M in reserves. Overall, financials look strong with revenue growth and healthy margins. Increased R&D spending is impacting profitability but could pay off long-term. Maintain current course. |

With role prompt:

| Role | Content |

|---|---|

| User | You are the CFO of a high-growth B2B SaaS company. We’re in a board meeting discussing our Q2 financials: {{FINANCIALS}} Analyze key trends, flag concerns, and recommend strategic actions. Our investors want aggressive growth but are wary of our burn rate. |

| Assistant | Board members, here’s my analysis of our Q2 financials: 1. Revenue ($15.2M, +22% YoY): - Insight: Enterprise growth (+30%) is outpacing overall growth. - Flag: SMB segment declined 5%. - Action: Reallocate 20% of SMB marketing budget to enterprise sales. Task product to simplify SMB onboarding. 2. Margins (Gross: 72%, +3% QoQ; EBITDA: 18%, -2% QoQ): - Insight: Infrastructure optimizations boosted gross margins. - Flag: R&D spend on AI features dented EBITDA. - Action: No change. Our AI roadmap is critical for enterprise stickiness. 3. Cash ($4.1M OCF, $28M reserves): - Insight: 15-month runway at current burn. - Flag: CAC up 20%, eroding LTV/CAC. - Action: Freeze non-essential hires. Implement sales funnel analytics to cut CAC by 15% in Q3. Strategic Stance: We’re threading the needle between growth and burn. Enterprise focus is our growth engine—invest there. But watch SMB churn; it could bleed into enterprise. Our AI bet is sound, but we must convert it to ARR by Q4, or we cut R&D by 30%. In sum: Double down on enterprise, streamline SMB, optimize sales, and monetize AI. Questions? |

2. Setup and Run a Test

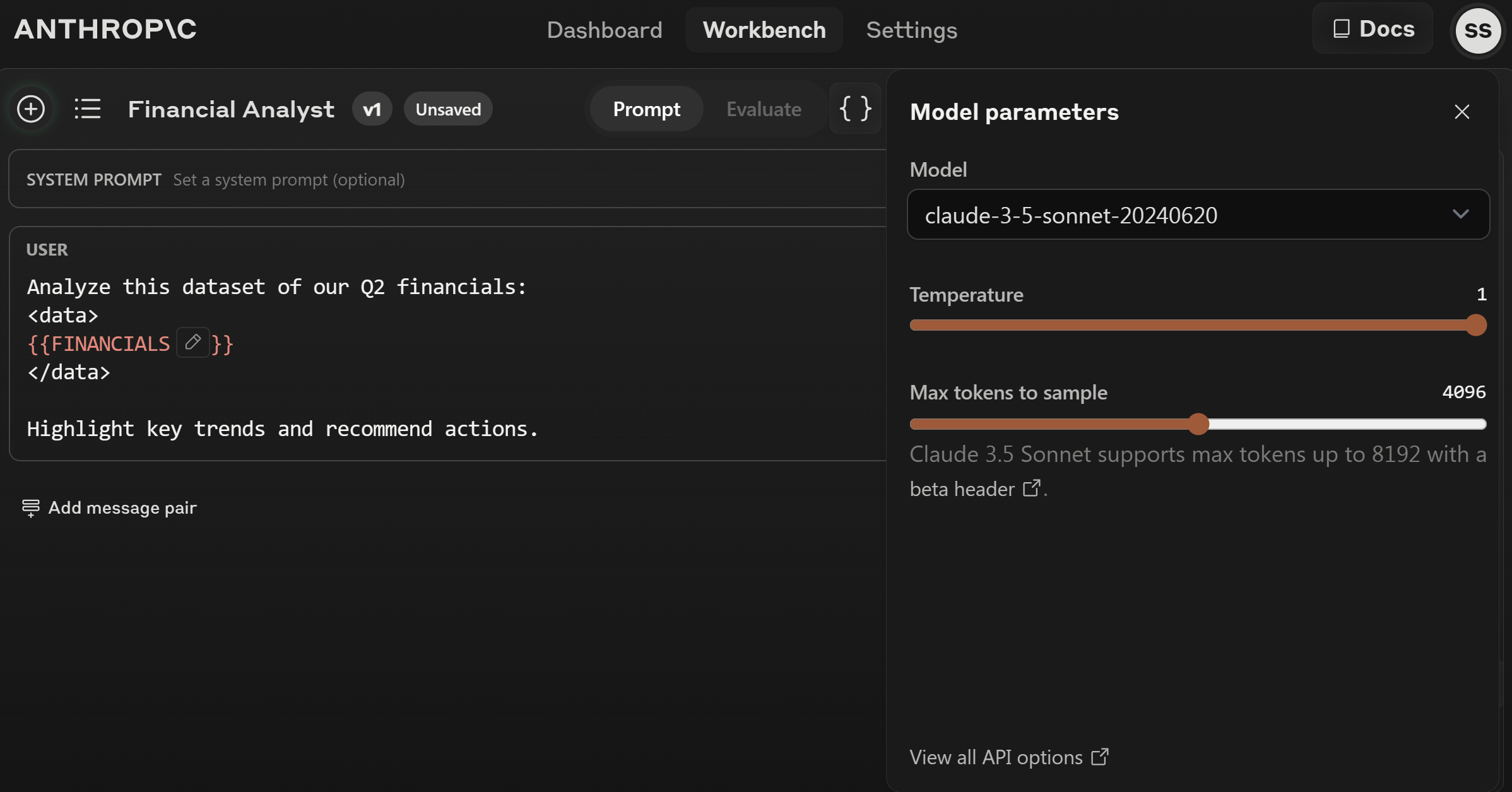

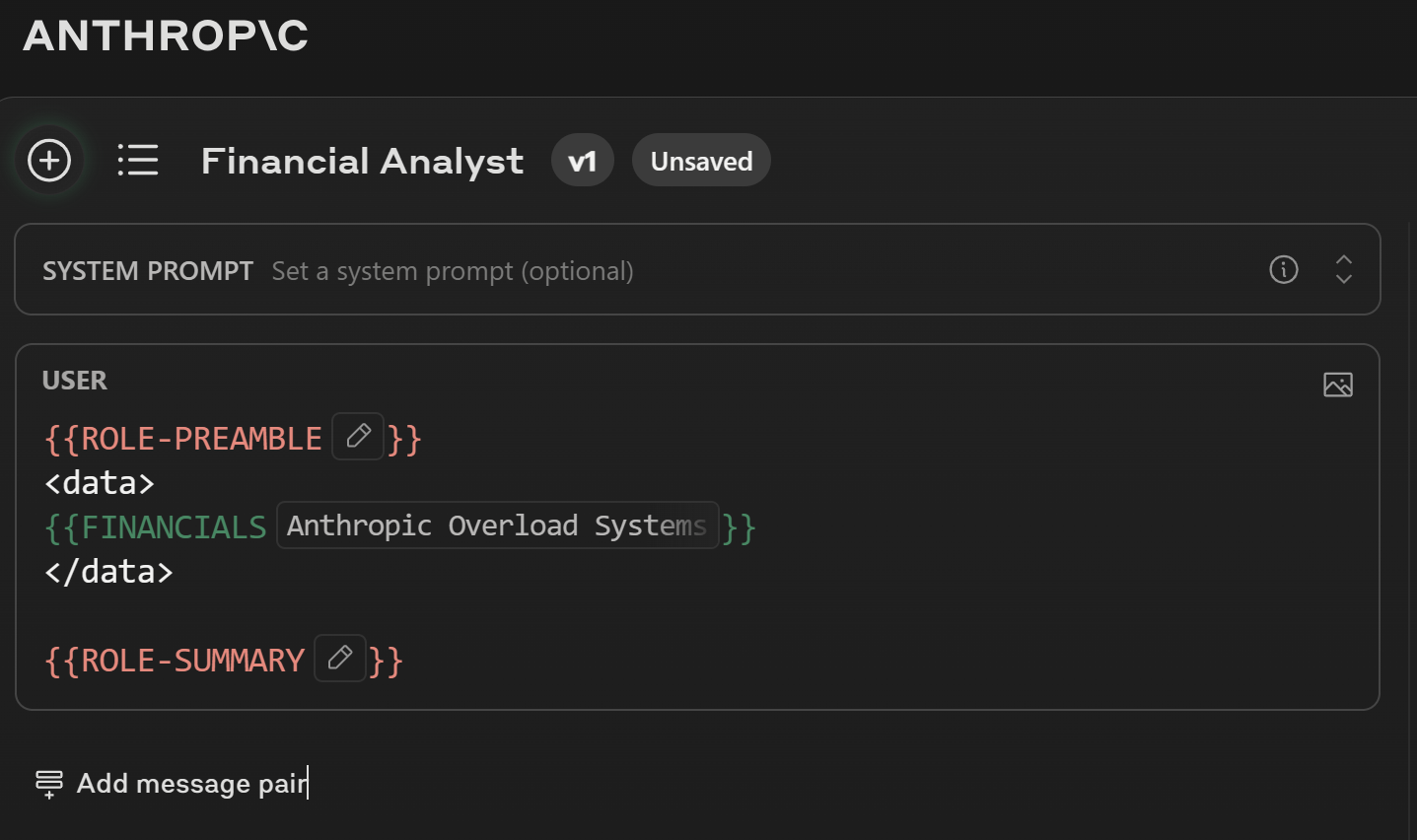

Lets put the Financial analysis prompt straight in to the new Workbench (Anthropic Console account required).

Anthropic Prompt Console

We’ll use Sonnet 3.5, and set the Temperature to the API Default of 1. Although the documentation instructs us to supply the role as the “System” parameter, the examples show “User” interactions, so that’s how we’ll set it up. The System Prompt is left blank.

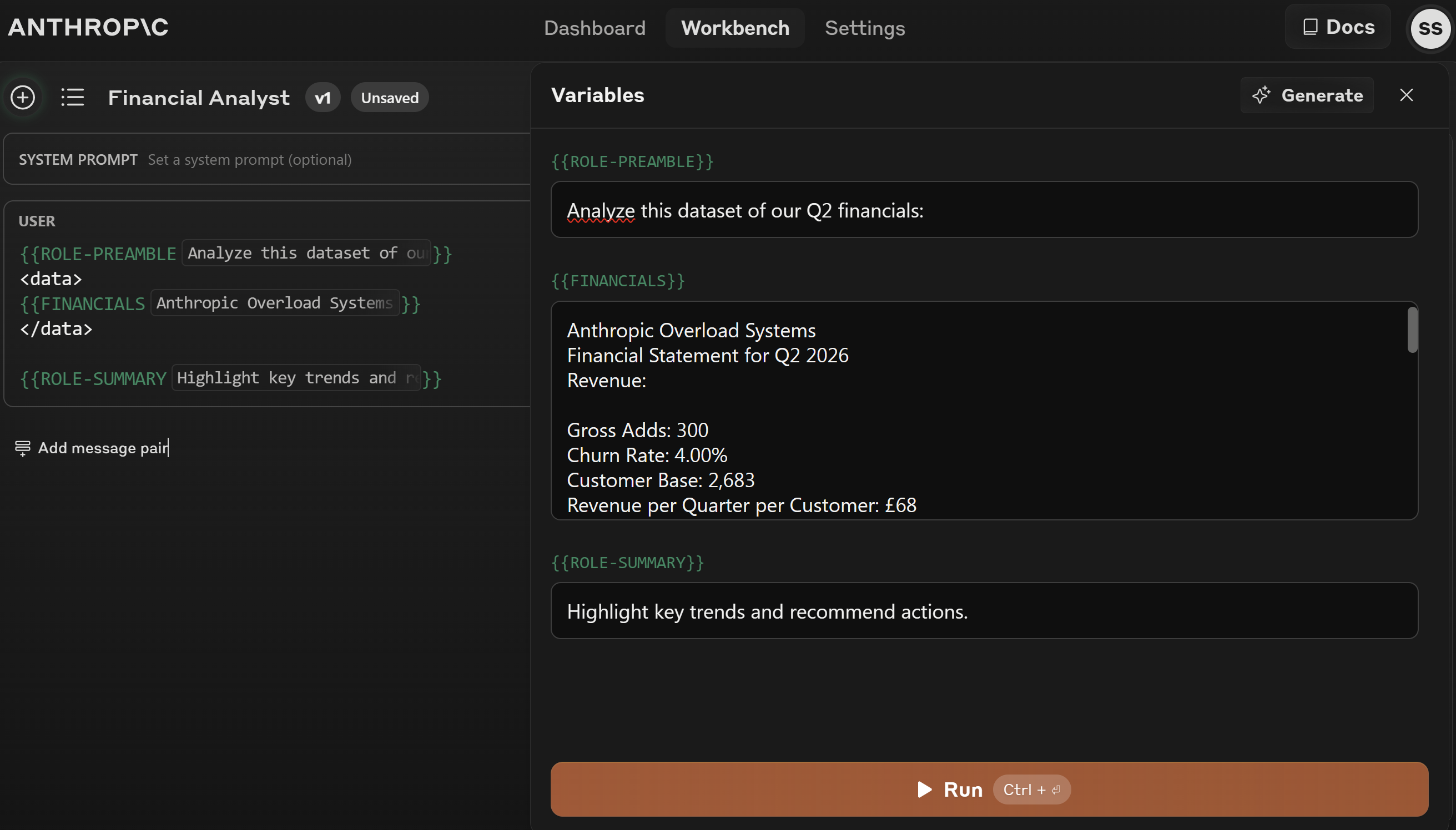

We don’t have access to the “dataset” referenced in the examples, but fortunately one of the scenarios in our Advanced Product Management course is for a SaaS company.

We’ll use ChatGPT’s data analysis tools to produce a financial statement for Claude to analyse.

Data for Analysis

Anthropic Overload Systems Financial Statement for Q2 2026 Revenue:

Gross Adds: 300 Churn Rate: 4.00% Customer Base: 2,683 Revenue per Quarter per Customer: £68 Total Revenue: £181,103 Expenses:

Sales and Marketing: £62,500 Customer Support: £26,830 Operations, Hosting, and Maintenance: Base Cost: £51,051 Uplift (5%): £2,553 Total: £53,604 Research and Development: £137,500 Total Costs:

Total Expenses: £280,434 Profit/Loss:

Total Revenue: £181,103 Total Costs: £280,434 Net Profit (Loss): -£99,331 Key Financial Metrics:

Average Monthly Profit per User (AMPU): -£37.02 Cost per Acquisition (CArC): £208 Summary For Q2 2026, Anthropic Overload Systems generated a total revenue of £181,103. Despite steady revenue, the company incurred significant expenses, primarily due to research and development costs and operational expenses. The total costs for the quarter amounted to £280,434, resulting in a net loss of £99,331. The company’s financial performance highlights a need to review and potentially optimize operational efficiencies and cost management strategies to improve profitability.

Now that we’ve got some financial data, we enter it in to the {{FINANCIALS}} variable and run the prompt. The “Evaluate” option is then enabled:

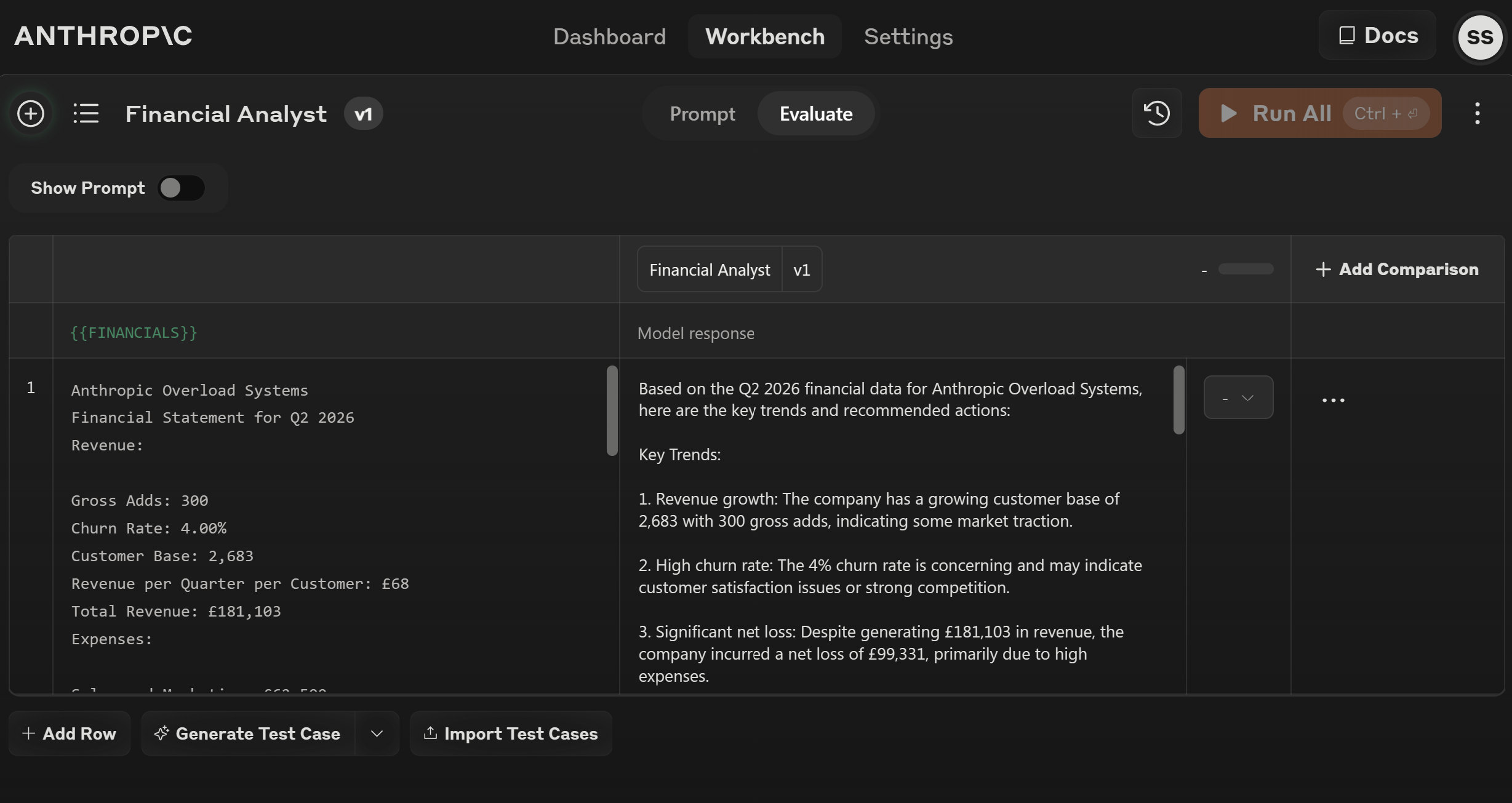

Anthropic Consle - Eval Section

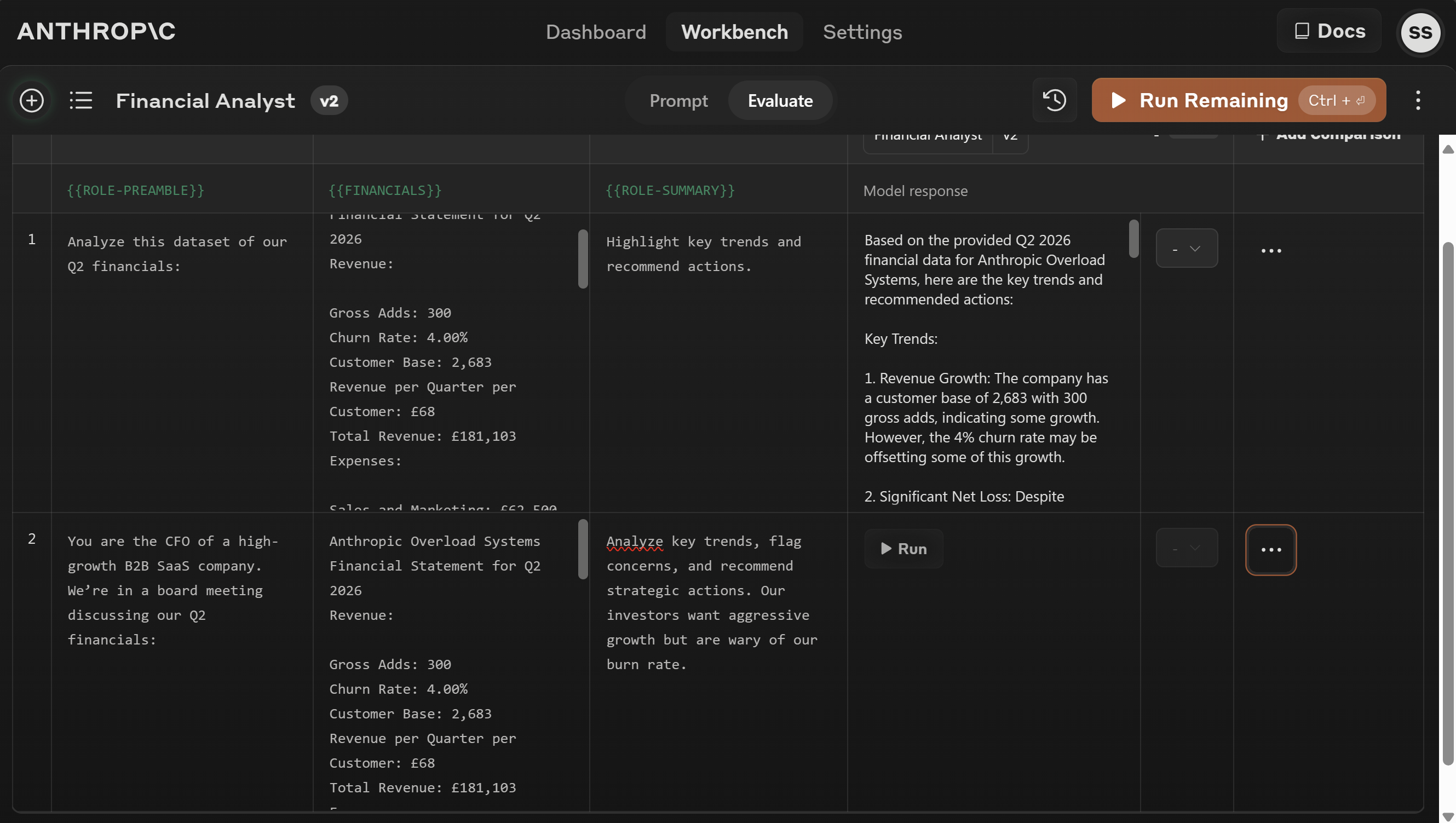

In this view, we have the {{FINANCIALS}} variable in the first column, with the response from Sonnet in the Second column. Tests are arranged in rows - each row can have different variables so we can compare outputs based on different scenarios.

There are a couple of cool features here. The “Generate Test Case” button populates the new row with a new financial scenario. In this case, it’s a company called “GreenGrow Agritech Solutions” who are in a much better financial position than “Anthropic Overload Systems”. It didnt’ just copy the format directly either, the new sample includes COGS and Debt-to-Equity ratios which the original financial analysis didn’t. There’s also a bulk import function if we already have data we want to load and try.

We can also manually rate the quality of each response:

Generated Data and Response Rating

3. Adjust the Roles

Of course, it’s actually the instructions in the prompt we want to change - so we’ll need to adjust our prompt template to make the Preamble and Summarisation parts of the prompt variables too:

Prompt for Testing

{{ROLE-PREAMBLE}}

<data>

{{FINANCIALS}}

</data>

{{ROLE-SUMMARY}}

Updated Prompt

Variables that haven’t been supplied yet are indicated in Red in the Prompt Tool.

We’ll supply the sections from the “Without Role” Financial analysis example prompt, and run the test:

Updated Prompt

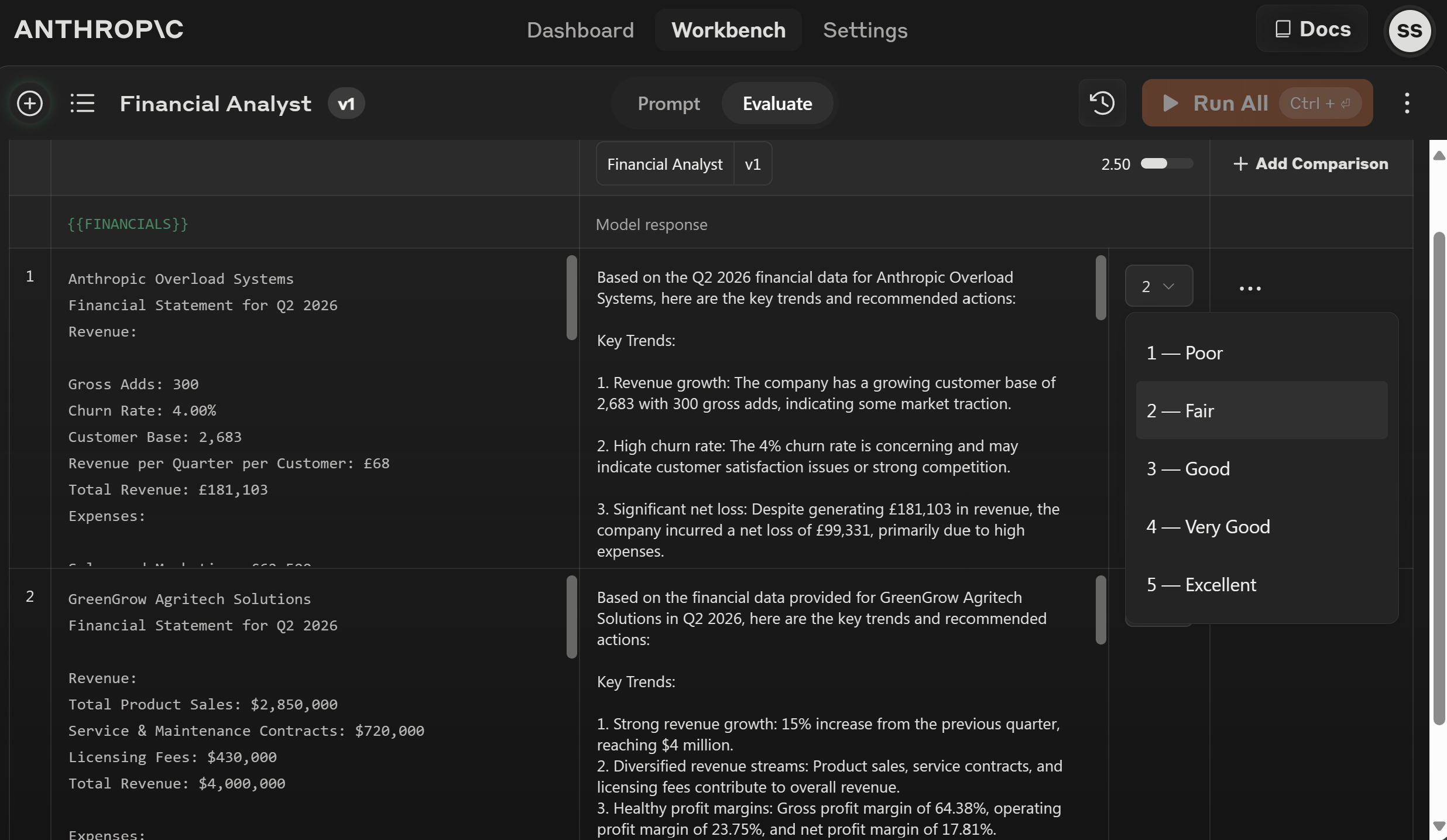

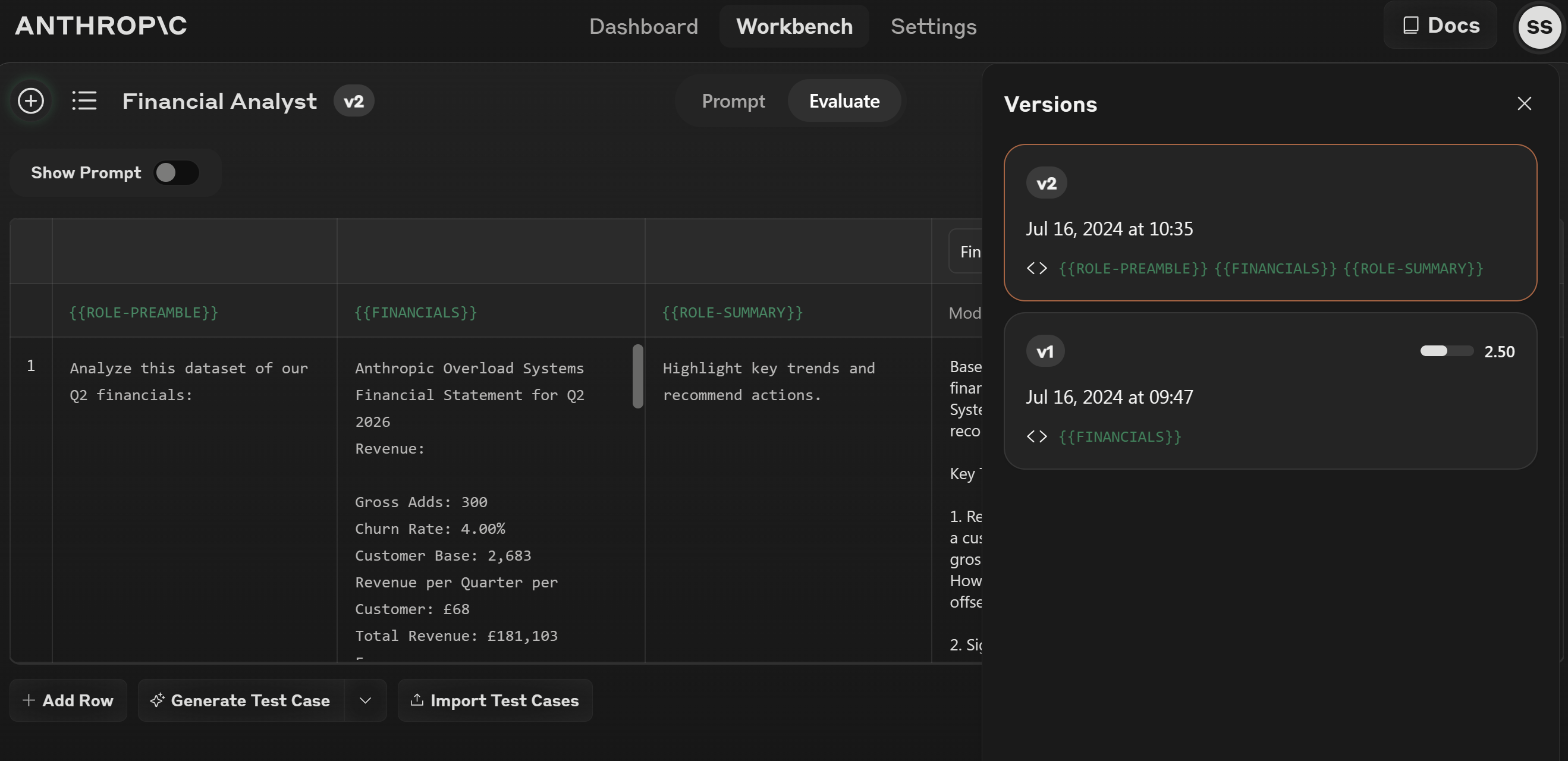

Going to the results (evals) table, we can see that the Console has applied a version number to our prompt. Next to “v1” is an average of the ratings we had given the outputs. It’s also stored the result of our “previous Without role” test run.

Results with Prompt Versioning

We can now enter a new row, and supply the variables to use the “Financial analysis with role prompting” preamble and summary from the API example.

Running both scenarios

That’s produced 2 outputs, but which is better?

4. Assess the Outputs

By eye, I prefer the second one.

But I’d prefer not to do manual analysis, so let’s produce a scoring rubric.

Rubric Creation Prompt

You will be presented with the results of an analysis of company financial data.

Produce a comprehensive, critical and specific rubric for assessing the task performance of the analyst who produced the report, and the quality of suggested actions.

For example:

1 Point : No Actions or Trends are recommended.

3 Points : Actions or Trends are identified, but lack reasoning and explanation.

5 Points : Actions or Trends are presented with reasoned insights that demonstrate understanding.

In our initial “Financial Analyst” prompts, there were directions to produce Actions and identify Trends in both examples, so I’ve given that as a hint.

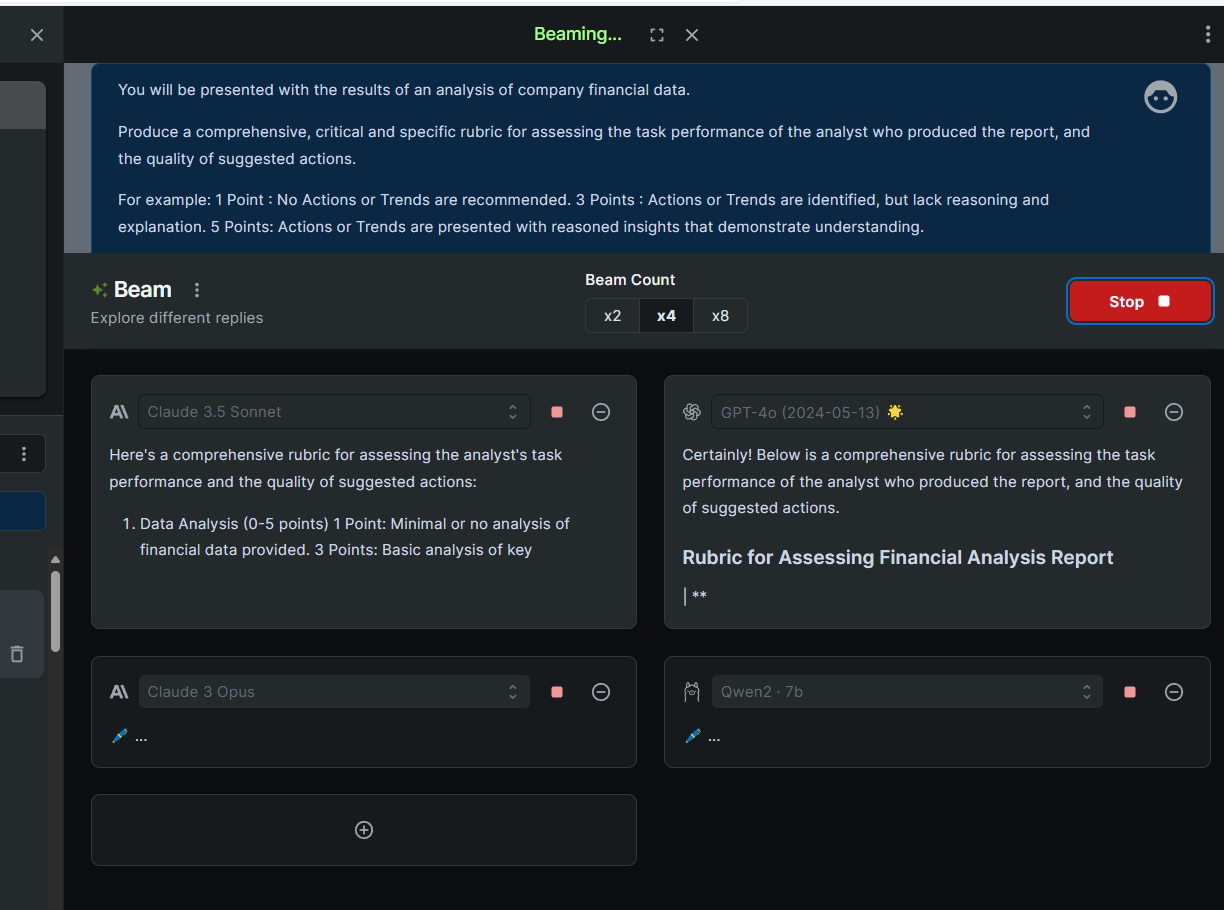

To get as many ideas and perspective as possible, we’ll use Big-AGI to combine the answers from 4 different models (Sonnet 3.5, GPT-4o, Opus 3 and Qwen2-7b).

Big-AGI BEAMing

The ensembling produces us the following scoring rubric. The parts in bold I’ve added in preparation for the next step - running the scoring.

Scoring Rubric

Your role is to use the below rubric to score the quality of Financial Reports:

Comprehensive Financial Analysis Rubric (100 points total)

Data Interpretation and Analysis (20 points) 1 point: Minimal interpretation of data; mostly raw numbers presented without context 10 points: Adequate interpretation of most key financial metrics with some context provided 20 points: Exceptional interpretation of data, including nuanced understanding of interrelationships between different financial metrics

Trend Identification and Reasoning (20 points) 1 point: No trends identified or incorrectly analyzed 10 points: Key trends identified with basic supporting data and some explanation 20 points: Comprehensive trend analysis, including short-term and long-term trends, with thorough explanation, data support, and reasoning behind each trend

Actionable Recommendations (20 points) 1 point: No recommendations provided or vague, generalized suggestions 10 points: Several relevant actions recommended with basic justification 20 points: Comprehensive set of strategic and tactical recommendations, prioritized and explained with detailed justification and potential impact analysis

Risk Assessment and Mitigation Strategies (10 points) 1 point: No mention of potential risks or downsides 5 points: Key risks identified with some basic mitigation strategies suggested 10 points: Comprehensive risk analysis including sensitivity analysis, scenario planning, and innovative risk mitigation approaches

Financial Acumen and Industry Context (10 points) 1 point: Limited understanding of basic financial concepts; no industry context 5 points: Good understanding of financial principles with some industry context provided 10 points: Exceptional financial expertise evident, including sophisticated financial modeling and insightful use of advanced metrics, integrated with thorough industry analysis

Data Visualization and Presentation (10 points) 1 point: Poor or no use of charts, graphs, or tables; data presented in a confusing manner 5 points: Adequate use of charts and graphs that generally support the analysis 10 points: Exceptional data visualization that significantly aids comprehension, including interactive or innovative presentation methods

Clarity, Organization, and Communication (10 points) 1 point: Report is disorganized and difficult to follow with poor communication skills 5 points: Report is somewhat organized with adequate communication skills 10 points: Well-organized report with excellent communication skills, clear and concise language, and a logical flow from one section to another

Scoring Guide: 90-100: Outstanding analysis and recommendations 80-89: Very good analysis with strong recommendations 70-79: Good analysis with some areas for improvement 60-69: Adequate analysis but significant room for enhancement Below 60: Needs substantial improvement

When supplied with an analysis, explain your scoring approach and provide an objective score.

I’m sure that can be refined a bit further, but it’ll do for now. We’ll run it a few times to see if it gives consistent results.

Let’s score the outputs to see if there is a winner…

5. Score the Outputs

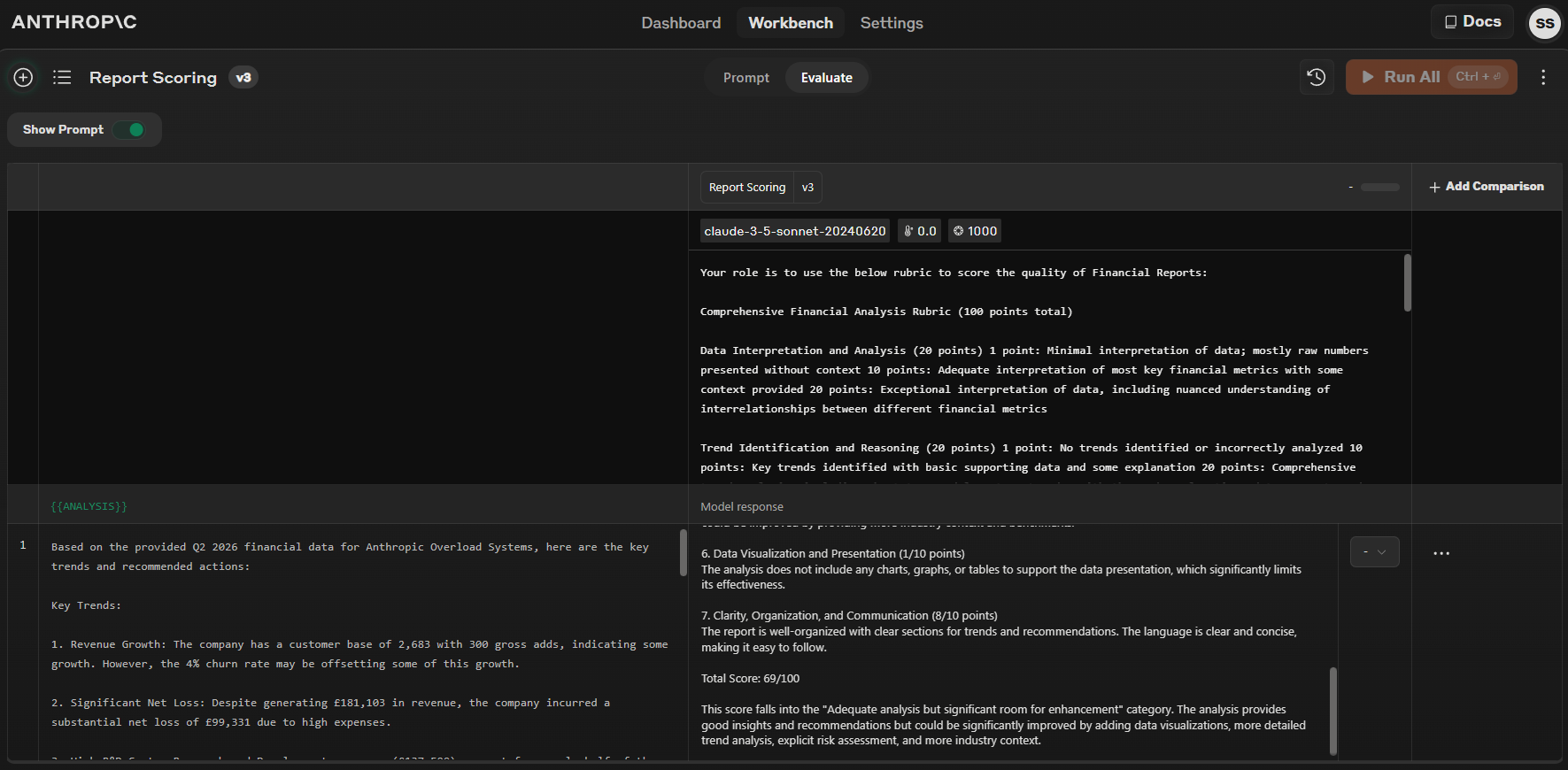

I don’t fancy pasting all this in to Claude.ai, so we’ll set the scoring prompt up in the Workbench as well.

I’ll use the above “Scoring Rubric” prompt as the System Prompt, and add a User Prompt: Score this analysis {{ANALYSIS}}. That makes it easy to run the scoring a few times, see how stable the scores are, and what differences there are between the two scenarios.

This will also give us a fresh context each time, ensuring that previous scoring and outputs won’t influence future ones.

Let’s start by scoring the “Without a role” outputs:

Scoring the ‘Without Role’ outputs

We’ll run it three times, at both Temperature 0 (Console Default) and Temperature 1 (API Default). A very nice touch in the Anthropic Workbench is that it treats changing the temperature setting as a new version of the prompt.

| Without Role | Temp 0 | Temp 1 |

|---|---|---|

| Run 1 | 69 | 70 |

| Run 2 | 69 | 73 |

| Run 3 | 69 | 71 |

The rubric is stable at producing scores for the input, and the temperature adjustment doesn’t cause too much fluctuation either.

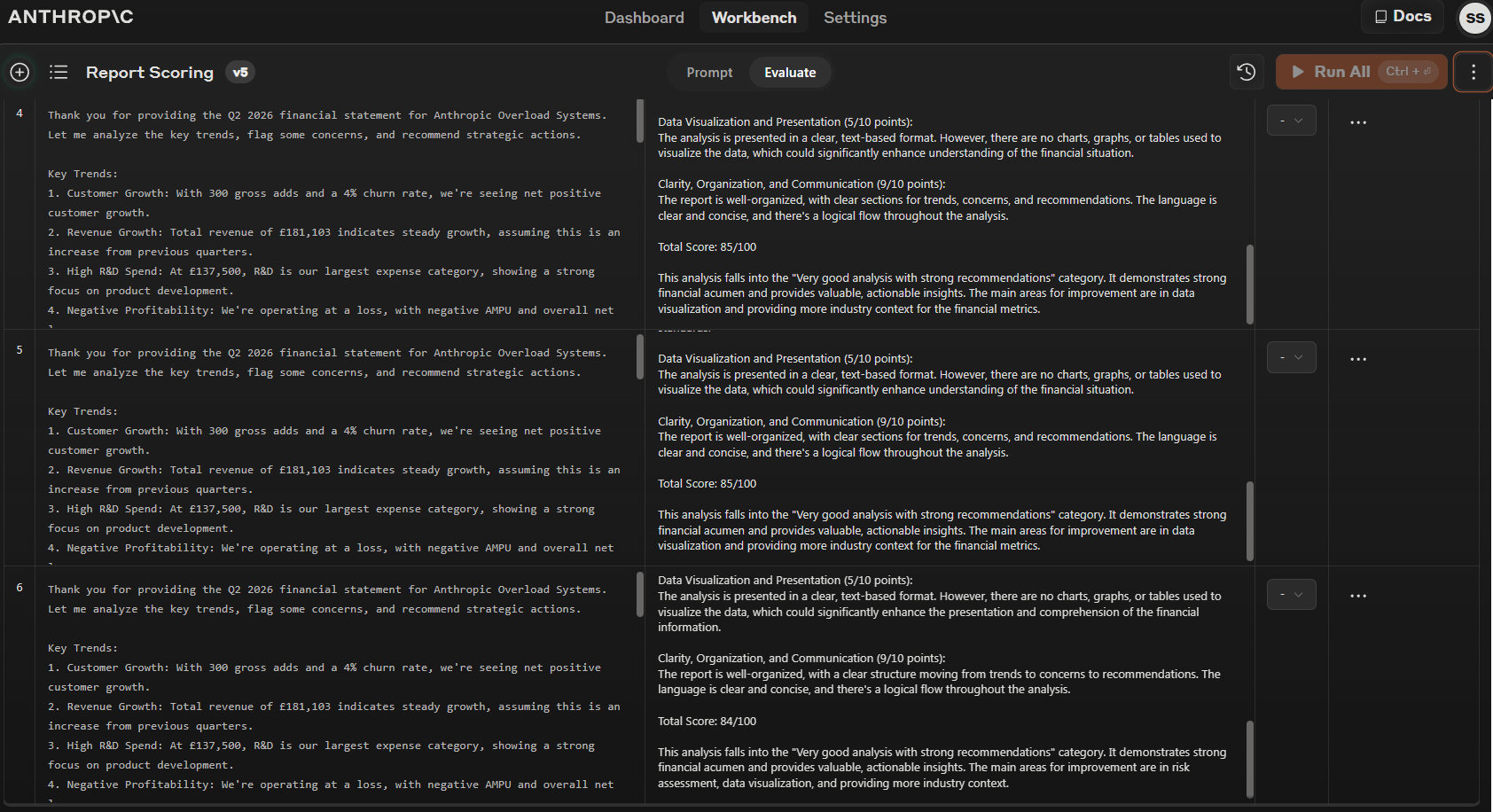

Let’s now score the outputs produced by the “With a role” prompt:

‘With a role’ Scores

| With a Role | Temp 0 | Temp 1 |

|---|---|---|

| Run 1 | 85 | 86 |

| Run 2 | 85 | 79 |

| Run 3 | 84 | 86 |

Again, the rubric gives us stable scores, and it’s sensitive to the input it’s assessing, which is a good sign.

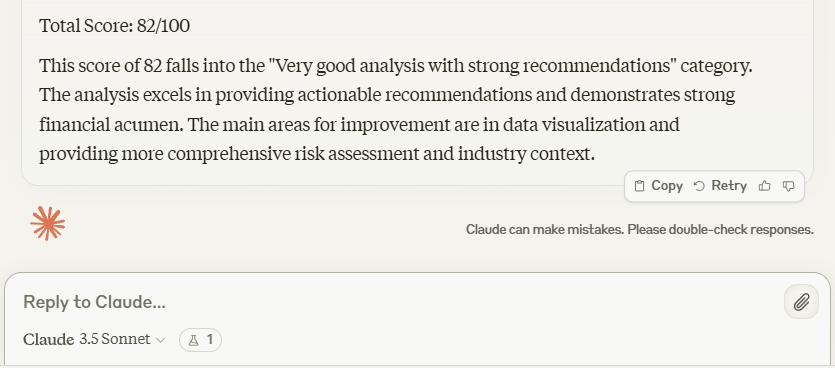

OK - maybe we will paste it in to Claude.ai after all as a quick cross-check (82/100):

‘With a role’ by Claude.ai

Taking the mean of those 6 evaluations, “Without a role” scored 70.1, “With a role” scored 84.1.

The Rubric creation prompt was careful not to match the initial prompts - there are no words in the Rubric creation prompt that aren’t in both the Example prompts, so the rubric shouldn’t be primed to fit one or the other outputs unfairly.

Against our generated rubric, “With a role” wins.

Conclusion

Anthropic Console Workbench

The Anthropic Workbench was fun to work with, and made the tests easy to set up. The inbuilt versioning, test data generation, and ability to store test results to avoid token-sapping re-runs is welcome.

Anything which moves the field of using LLMs from ritual to empiricism is a good thing, and this is an accessible starting point available to everyone with an Anthropic Developer account. Of course, this is another potential source of lock-in for Anthropic customers, but that’s a discussion for a different day.

There are 2 features missing that will improve the utility of the workbench, and surely they are coming soon:

- Model Grading of Outputs: The ability to grade outputs by supplying a snippet of code or rubric would be very convenient.

- Crowdsourced User Grading: The ability to crowdsource qualitative scoring over last large Test Runs would also be very helpful.

Role Prompting (or Scenario Building) - Overrated?

Is it time to declare the end of Role Prompting? For straightforward prompts and queries, I think this has been redundant against newer models (since GPT-4). The example used in this article was conducting a fairly complex open-ended task, but specifying a role did improve performance. The risk here is that for this type of task, evaluating the difference over a few runs by eye is hard, and the results “Without role” already look good. Incremental imrpovements from there are not going to be immediately obvious - and I fear ritualistically avoiding Role Prompting “because it doesn’t work” could end up being harmful.

The example here did more than just change the Role in the prompt though: it incorporated a scenario. The LLM is always predicting the next token ahead based on the entire context, so I think of it like constructing a novel. The scenario of the board meeting and wary investors describes a scene - that the LLM then duly narrates. If the scenario was changed to “You’re a CFO relaxing on the beach with a cocktail”, we’d probably get a different quality of analysis. Getting the context right so the LLM can author the correct scene is perhaps a more useful model than having it pretend it’s a person. I hypothesize that we could change or add roles to the original prompt, and it wouldn’t change the scores much, if at all. However, we have previously demonstrated that Claude models are sensitive to the presence of even a simple “You are a helpful AI Assistant” System Prompt.

These are all worthy experiments worth trying as we continue to increase the value received from LLMs.