Chat Interface Roundup (Jul 24)

Introduction

The end of 2022 marked the release of ChatGPT to consumers, offering the first conversational interface to a powerful Generative AI platform. The simplicity of natural language chat and seemingly limitless capabilities of GPT led to the fastest growing user base ever recorded.

Chat interfaces remain popular and accessible, and continue to incorporate features that expand the utility of Generative AI, such as Document Retrieval, agent-assisted Code Execution, and Web Search.

This roundup provides an overview of some popular chat front-ends. For this review, we will compare the paid plans for Anthropic (Claude) and OpenAI (ChatGPT), and then look at four popular Open Source projects to explore innovative features and compare their relative strengths.

The great news is that all the offerings mentioned here are of high quality, each with distinct advantages. The challenge lies in selecting the best tool and prioritizing the available features based on your specific needs.

Overall Summary

Most Power Users and Workgroups will be best served by choosing one or more Open Source front-ends for their chats, selecting tools based on their specific needs.

This does require effort and technical skill: dealing with API Keys, monitoring metered usage, and considering deployment concerns. Big-AGI is notable as it offers an incredibly rich experience with very little overhead.

For ease of use, of the two major providers’ consumer front-ends, Anthropic offers the preferred model with Sonnet 3.5. The Artifacts feature is unique and well-polished, lowering the barrier for interactive creativity and prototyping.

For enterprise teams, the Cloud Storage Integration and Data Analysis features in ChatGPT may well provide the edge in a number of production scenarios.

Features We Love

Before examining the front-ends, here are some features that we find significantly impact the chat User Experience.

| Feature | Description |

|---|---|

| Chat History Search | Locate past conversations quickly through keyword search. |

| Chat Cost Calculation | See the cost of each chat turn / context window usage. |

| Mermaid/SVG Diagrams | Built-in Mermaid.js diagrams and SVG support for content visualization. |

| Conversation Branching | Explore alternative paths within a conversation and manage context |

| Message Editing | In-place editing of Input Prompts and Output Content. |

| Custom GPTs | Define task-specific chat instructions and settings. |

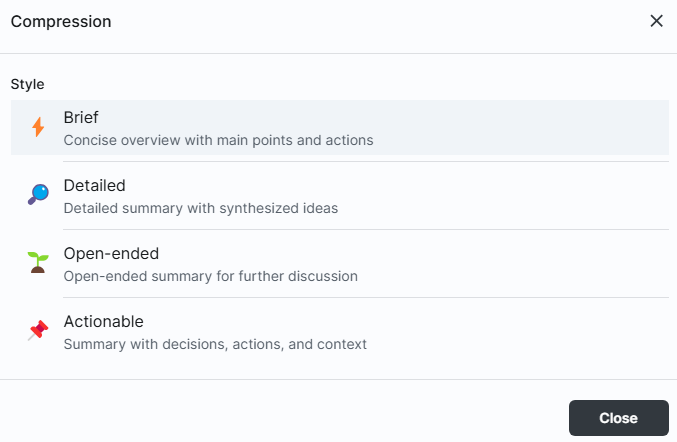

| Chat Compression | Summarize long chats into new, coherent sessions. |

| RAG Document Features | Search and efficiently include relevant document parts in chats. |

For each product, we have annotated these features:

- Feature Present

- Feature Partially Implemented

- Feature Absent

ChatGPT and Claude

At the time of writing, both the ChatGPT Plus plan and Claude Professional are priced at $20/month. Both are rate-limited: ChatGPT is advertised as allowing 80 messages per 3 hours, while Claude's limit is based on Input/Output token usage, with an estimate of 45 messages per 5 hours for short messages, contingent upon message length and overall capacity.
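To compare the two advertised limits on a like-for-like basis, the short calculation below converts each to an average hourly rate. It uses only the figures quoted above. The Claude figure is an estimate for short messages, so treat the result as a rough guide rather than a guarantee.

```python
def messages_per_hour(messages: int, window_hours: float) -> float:
    """Average message allowance per hour for a rate-limit window."""
    return messages / window_hours


# Figures quoted in the text above.
chatgpt_rate = messages_per_hour(80, 3)  # advertised ChatGPT Plus limit
claude_rate = messages_per_hour(45, 5)   # estimate for short messages

print(f"ChatGPT Plus:        {chatgpt_rate:.1f} messages/hour")
print(f"Claude Professional: {claude_rate:.1f} messages/hour")
print(f"Ratio:               {chatgpt_rate / claude_rate:.1f}x")
```

On these numbers ChatGPT allows roughly three times as many messages per hour, which is consistent with the observation that Claude's limits are more likely to affect moderate to heavy users.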

Small enterprise teams and workgroups will find both products serve their needs well. Claude’s current rate limits, however, may present a challenge for moderate to heavy users. Users might encounter temporary pauses in service lasting a few hours as the rate limiter resets. While this can be a minor inconvenience, it’s worth considering when evaluating the tool for your team’s specific usage patterns and requirements.

ChatGPT’s Cloud Storage integration with Microsoft and Google, paired with the built-in Data Analysis functionality, delivers a formidable productivity tool. GPT-4o is a capable, fast model and the ChatGPT User Experience is responsive.

For teams collaborating and building content, Claude’s Projects feature is compelling, and the Google Sheets integration offered by Anthropic is useful for bulk data and lightweight business integration operations. Claude also includes searchable chat history, available by selecting “View all” from the recent chats display - a feature sorely missing from ChatGPT.

Anthropic Claude

Claude.ai

Anthropic launched the Claude.ai paid Professional and Team products in Europe in May 2024, and has iterated rapidly since then, launching improved models (Sonnet 3.5) as well as front-end features (Artifacts, Projects).

| At a glance | | |
|---|---|---|
| ▲ Claude 3.5 Sonnet Model | ▲ Projects and Artifacts Features | |
| ▲ Effective System Prompt | ▲ Artifacts Share and Remix | ▲ Mermaid Support |
| ▼ Rate Limits impede use | ▼ Lagging UX in long chats | ▼ Limited Document Management |
| ▼ No Web Search | | |

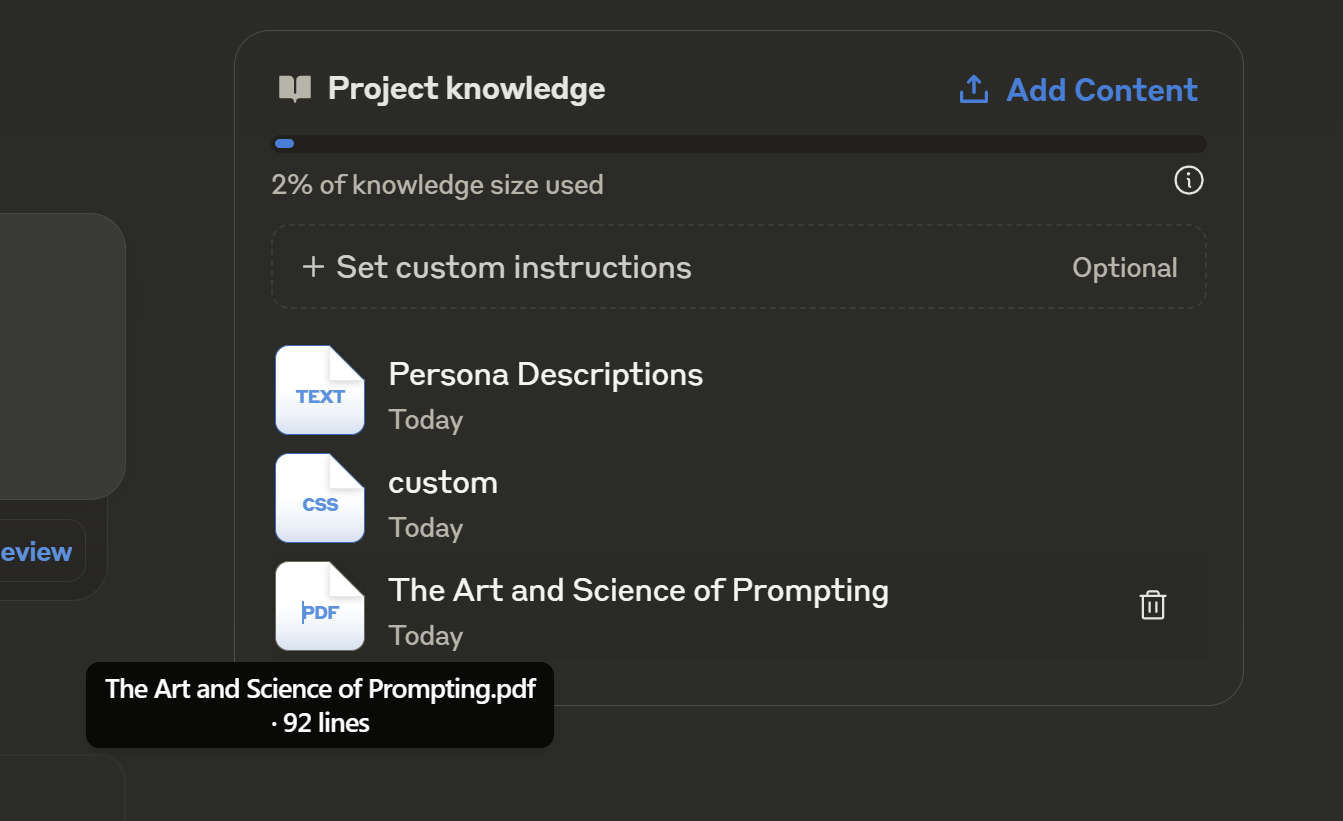

Claude Projects File Manager

The current model offerings perform well, and three recent models are available (Haiku, Sonnet and Opus).

While Anthropic offers a compelling front-end experience, the current rate limits and heavy front-end make it challenging to recommend for critical work. It also lacks the ability to share chats, which can be a convenient way to show workings and collaborate with others.

The Projects feature is useful for both individuals and workgroups (see our initial impressions), but AnythingLLM offers a powerful alternative.

A special mention goes to the Claude for Sheets integration. This adds a CLAUDE("Prompt") function to Google Sheets, providing simple, immediate integration with large datasets and Sheets-based workflows. Note: this feature requires an API key and is not accessible directly via the consumer interface.

Update: 16 Jul ‘24 - shortly after publication, Anthropic released the Android app, so the table above has been corrected.

OpenAI ChatGPT

ChatGPT

OpenAI’s front-end is fast and simple, balancing ease of use with powerful integrated features.

| At a glance | | |
|---|---|---|
| ▲ Powerful Custom GPTs | ▲ Cloud Storage Integration | ▲ Agent Code Writing/Execution |
| ▲ Data Analysis Features | ▲ Temporary Chat Mode | |
| ▼ Default Privacy Settings | ▼ Feature Discoverability | |

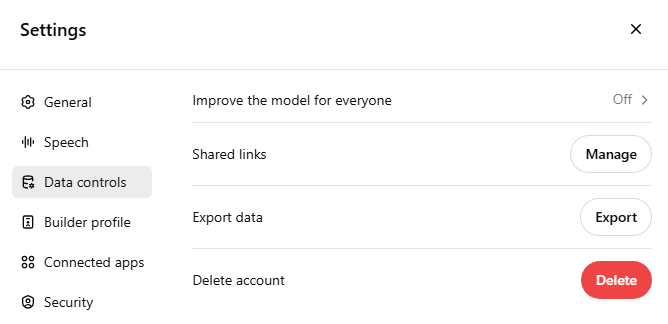

ChatGPT Privacy Setting

GPT-4o is available on ChatGPT’s free plan, with the paid Personal and Business plans offering further access to the GPT-3.5 and GPT-4 models as well as DALL-E for image generation. We recently looked at the performance of the OpenAI Model Suite here.

For customers using the paid plan, the default setting to share data for training underscores a potential trust issue, as users may feel deceived by the need to manually opt-out to protect their data privacy. This aspect requires transparency and better communication to ensure users are fully informed about their data handling options.

ChatGPT Temporary Chat

During the GPT-4o release announcement, OpenAI teased some exciting multi-modal features, leading with a very low-latency voice interface. This has not yet been launched and remains keenly anticipated.

Open Source Web Front-Ends

The proliferation of open-source front-ends has democratized access to advanced LLMs and innovative User Experiences. The products below all have extensive model support, enabling fast switching between different models and providers, and offering the option of private Language Model hosting (either workstation or server-based).

Big-AGI (v1.6.3)

Big-AGI Big-AGI GitHub Big-AGI Live

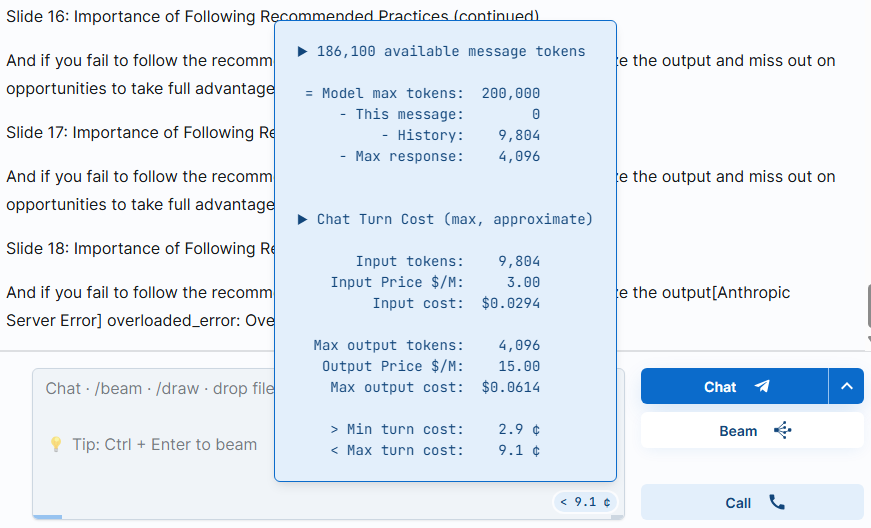

Big-AGI stands out for its comprehensive feature set and privacy-focused approach. It offers a hassle-free, private, and powerful experience for users seeking robust chat capabilities with minimal configuration. It is packed with practical features. The Chat Input area has a visual indicator showing how much context has been used, as well as a Chat Cost calculator.
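To make the two indicators concrete, here is a minimal sketch of how a per-turn cost and a context-usage figure can be derived from token counts. This is not Big-AGI's implementation. The `ModelPricing` class, the prices, and the context window size are hypothetical values chosen for illustration, so substitute your provider's published rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Hypothetical pricing in USD per million tokens (not real provider rates)."""
    input_per_million: float
    output_per_million: float
    context_window: int  # maximum number of tokens the model accepts


def turn_cost(pricing: ModelPricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one chat turn, given prompt and completion token counts."""
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


def context_used(pricing: ModelPricing, tokens_in_context: int) -> float:
    """Fraction of the context window currently occupied, capped at 1.0."""
    return min(tokens_in_context / pricing.context_window, 1.0)


# Illustrative numbers only.
example = ModelPricing(input_per_million=3.0, output_per_million=15.0,
                       context_window=200_000)
cost = turn_cost(example, input_tokens=12_000, output_tokens=800)
usage = context_used(example, tokens_in_context=12_800)
print(f"Turn cost: ${cost:.4f}, context used: {usage:.1%}")
```

Because the whole conversation is normally resent as input on every turn, the input side of the cost grows with chat length, which is why a running indicator is useful in long sessions.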

| At a glance | | |
|---|---|---|
| ▲ Mid-chat Model Switching | ▲ Multi Model Ensembling | ▲ Chat Search |
| ▲ Privacy-Focused Design | ▲ SVG and Mermaid Support | ▲ Persona Builder |
| ▲ Chat Cost Estimation | ▲ Extensive Model Support | ▲ Good Chat Export |
| ▲ Condensable Chat Summaries | ▲ ReACT Prompting | |
| ▼ Limited Multiuser Applications | ▼ Assistant Setup | ▼ Limited Authentication |

Big-AGI Chat Cost Calculator

BEAM allows sending the same prompt to multiple models simultaneously, comparing and then aggregating a combined best-output. Ensembling is powerful, and gives visibly higher quality outputs for complex tasks.
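The general fan-out-then-merge pattern behind this kind of ensembling can be sketched as follows. This is an illustration of the idea, not BEAM's actual code. The model callables are stand-in stubs, and `fan_out` and `build_merge_prompt` are hypothetical names. In practice each callable would wrap a real provider API call, and the merge prompt would be sent to a final model.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# A "model" here is any function that maps a prompt to a response string.
Model = Callable[[str], str]


def fan_out(prompt: str, models: dict[str, Model]) -> dict[str, str]:
    """Send the same prompt to every model concurrently and collect the replies."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


def build_merge_prompt(prompt: str, candidates: dict[str, str]) -> str:
    """Build a follow-up prompt asking one model to combine the candidate answers."""
    drafts = "\n\n".join(f"[{name}]\n{text}" for name, text in candidates.items())
    return (
        f"Question:\n{prompt}\n\n"
        f"Candidate answers:\n{drafts}\n\n"
        "Combine the strongest points into one final answer."
    )


# Stand-in stubs so the sketch runs without any API keys.
stub_models: dict[str, Model] = {
    "model_a": lambda p: f"A says: {p.upper()}",
    "model_b": lambda p: f"B says: {p[::-1]}",
}

candidates = fan_out("compare chat front-ends", stub_models)
merge_prompt = build_merge_prompt("compare chat front-ends", candidates)
print(merge_prompt)
```

Note that every additional model adds its own metered cost per prompt, so ensembling is best reserved for the complex tasks where the quality gain is visible.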

Chat Compression is well thought-out, enabling a long-context chat to be summarised and used to start a fresh context.

Big-AGI Chat Compression Dialogue
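The idea behind chat compression can be sketched in a few lines: older messages are replaced by a summary, and the most recent turns are carried over verbatim into a new session. This is a simplified illustration under stated assumptions, not Big-AGI's implementation. `compress_chat` is a hypothetical helper, and the `summarize` argument is a stub standing in for a call to a language model.

```python
from typing import Callable

Message = dict[str, str]  # e.g. {"role": "user", "content": "..."}


def compress_chat(
    messages: list[Message],
    summarize: Callable[[str], str],
    keep_recent: int = 2,
) -> list[Message]:
    """Return a shorter message list: a summary of older turns plus recent turns."""
    if len(messages) <= keep_recent:
        return list(messages)  # nothing worth compressing
    split = len(messages) - keep_recent
    older, recent = messages[:split], messages[split:]
    transcript = "\n".join(f"{m['role']}: {m['content']}" for m in older)
    summary = summarize(transcript)
    header = {"role": "system",
              "content": f"Summary of earlier conversation: {summary}"}
    return [header, *recent]


def stub_summarize(text: str) -> str:
    """Stand-in summarizer; a real one would send the transcript to a model."""
    return f"{len(text.splitlines())} earlier messages condensed"


chat = [
    {"role": "user", "content": "What is RAG?"},
    {"role": "assistant", "content": "Retrieval-augmented generation."},
    {"role": "user", "content": "How do I deploy it?"},
    {"role": "assistant", "content": "Start with a local instance."},
]
new_session = compress_chat(chat, stub_summarize, keep_recent=2)
for message in new_session:
    print(f"{message['role']}: {message['content']}")
```

The trade-off is that detail in the older turns is lost to the summary, so it helps to be able to review the summary before starting the new session.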

There is also a comprehensive Persona feature that, given a text corpus or YouTube transcript, will construct a custom prompt to simulate the author. This is well implemented, with the ability to view and customise the detailed process.

File Upload and URL Fetching are convenient and work well, although there is no Document Store Integration or RAG feature at the moment. Big-AGI is usable from the website, without needing to run or deploy anything (I personally use a local build). All chat data and configuration are stored locally in the Browser. For deployment, only Basic Authentication is supported. Big-AGI is a low-hassle, full-featured chat interface, optimised for individual Power Users - and fulfils that task well.

HuggingFace ChatUI (v0.9.1)

HF ChatUI HF ChatUI Github HuggingChat

ChatUI, powering HuggingChat, aims to make advanced open-source models accessible to everyone without proprietary constraints. It delivers an extremely fast user experience, similar to ChatGPT, and is built as an internet-facing service from the ground up.

| At a glance | | |
|---|---|---|
| ▲ Exceptional Model Support | ▲ Customisable Assistants | ▲ Highly Responsive UI |
| ▲ Sophisticated Web Search | ▲ Docker for Easy Deployment | ▲ Internet Scale Platform |
| ▼ Official Docs Lag Behind | ▼ Complex Configuration | ▼ No Mermaid/SVG Support |

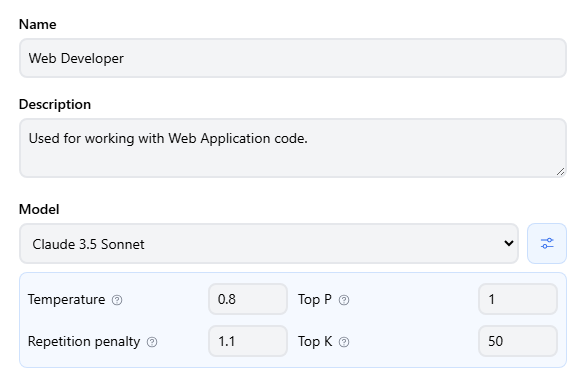

ChatUI provides excellent, diverse model support, including commercial endpoints for inference and embedding from OpenAI, Anthropic, Google, and AWS. Assistants are well-implemented, with a UX that excels at sharing and discovery.

HF ChatUI Assistant Settings

Creating task-based assistants is straightforward, with options for assistant internet access enabling Assistants that can answer from specific knowledge bases. Model parameters such as Temperature and Repetition Penalty can be tuned per-assistant, allowing optimization for creative vs. analytical tasks. For now, there are some aspects of this functionality that require manually updating the database (Featured Assistants, Admin User), but this is relatively straightforward.

Extendable tools functionality is under development, with current tested support limited to the Cohere Command R+ model.

The platform supports anonymous access and authenticated access via OIDC or third-party authenticators, including CloudFlare Access and Tailscale via trusted email headers. ChatUI extends to high-volume, multi-user scenarios, providing a ChatGPT-like experience. It provides the ability to export chat data (with user permissions) in Parquet format for easy analysis and model tuning.

While the official documentation may lag slightly behind the rapid development, the community announcements provide valuable, up-to-date information. Users looking for the latest features and configurations should check both the official docs and friendly community discussions to find what they need.

Deployment options for ChatUI are flexible but require technical expertise. It can be quickly deployed using Docker, and comes with the option of an embedded MongoDB, which makes running a local instance straightforward. A one-click deployment option is available through HuggingFace Spaces, but this still requires users to be comfortable with configuring applications and setting up HuggingFace-hosted models. There is also a nascent Helm configuration for those looking for internet-scale deployments.

HuggingFace ChatUI stands out for its shareable Assistants feature and clean, responsive front-end. It requires technical know-how to set up and fully utilize. It’s an excellent choice for developers and power users - particularly those who prioritize model diversity and assistant customization. The end user experience is also blisteringly quick, and provides a good starting point for customisation.

LibreChat (v0.7.4-rc1)

LibreChat LibreChat Github LibreChat Demo

LibreChat offers a comprehensive, open-source alternative to proprietary chat interfaces, balancing features for both casual and power users. It offers a ChatGPT-like interface, with

| At a glance | ||

|---|---|---|

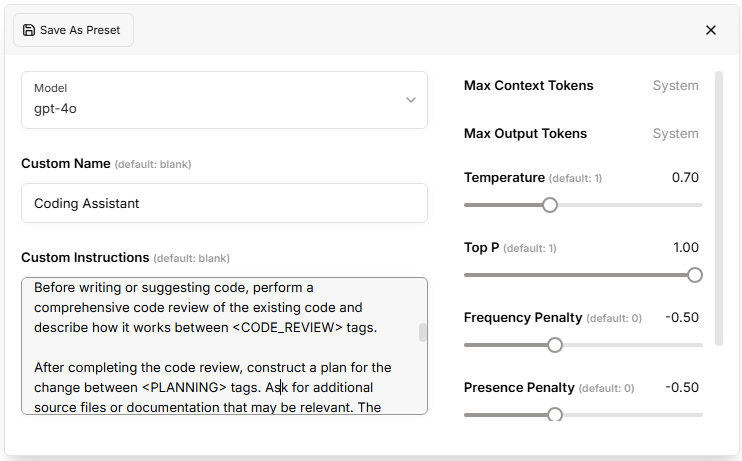

| ▲ OpenAI Assistants Support | ▲ Multimodal Chat | ▲ Tunable Custom Presets |

| ▲ Web Search Plugins | ▲ Enhanced Conversation Branching | ▲ Mid-Chat Model Switching |

| ▲ Multilingual Support | ▲ OIDC and LDAP/AD Auth | |

| ▼ No Mermaid/SVG Support |

LibreChat Preset Configuration

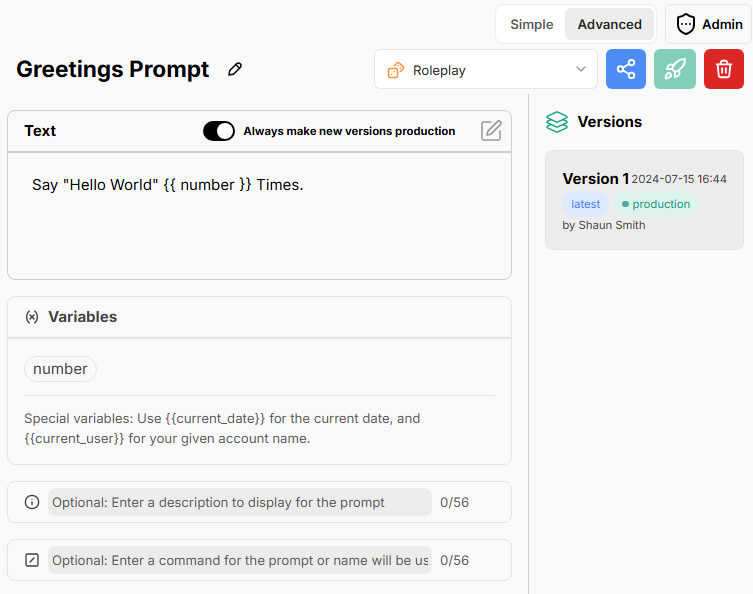

The Prompt Library feature is wonderful. It allows creating and sharing standard prompt templates. When a template includes a variable, LibreChat asks the user to enter the necessary values and then submits the prompt. This is a simple, flexible way to encourage re-use and set up repeatable workflows.

LibreChat Custom Prompt
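The mechanics of a variable-based prompt template are easy to sketch: find the placeholders, collect a value for each, then substitute. The example below is a generic illustration and not LibreChat's code. The `{{name}}` placeholder syntax and the helper names `find_variables` and `fill_template` are assumptions made for this sketch.

```python
import re

# Assumed placeholder syntax for this sketch: {{variable_name}}
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def find_variables(template: str) -> list[str]:
    """Return the distinct variable names in a template, in order of appearance."""
    seen: list[str] = []
    for name in PLACEHOLDER.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def fill_template(template: str, values: dict[str, str]) -> str:
    """Substitute every placeholder, raising if any value has not been supplied."""
    missing = [name for name in find_variables(template) if name not in values]
    if missing:
        raise KeyError(f"Missing values for: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda match: values[match.group(1)], template)


template = "Summarise {{document}} for a {{audience}} audience."
variables = find_variables(template)  # what a UI would ask the user to fill in
prompt = fill_template(
    template, {"document": "the Q2 report", "audience": "technical"}
)
print(variables)
print(prompt)
```

Failing loudly on a missing value, rather than sending a prompt with an unfilled placeholder, is what makes templates like this safe to share across a team.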

Detailed token usage is tracked, although access to the information is currently through the command line. There is also nascent RAG functionality built in, with file capture and search, but this is still a work in progress.

User Management and Authentication Integration is well thought through, fitting seamlessly into enterprise deployment scenarios.

Another good front-end with a similar feature set worth evaluating is Open WebUI.

Mintplex AnythingLLM (v1.5.8)

AnythingLLM AnythingLLM Github

| At a glance | | |
|---|---|---|
| ▲ Easy to set up RAG Search | ▲ Cost Control Features | ▲ Comprehensive Agent Support |
| ▲ Document Ingest (inc. GitHub) | ▲ Workspaces | ▲ Embeddable Chat Widgets |
| ▲ Workgroup User Management | | |
| ▼ No OIDC/OAuth Authentication | ▼ No Cloud Drive Integration | ▼ No Multi-Modal Image Support |

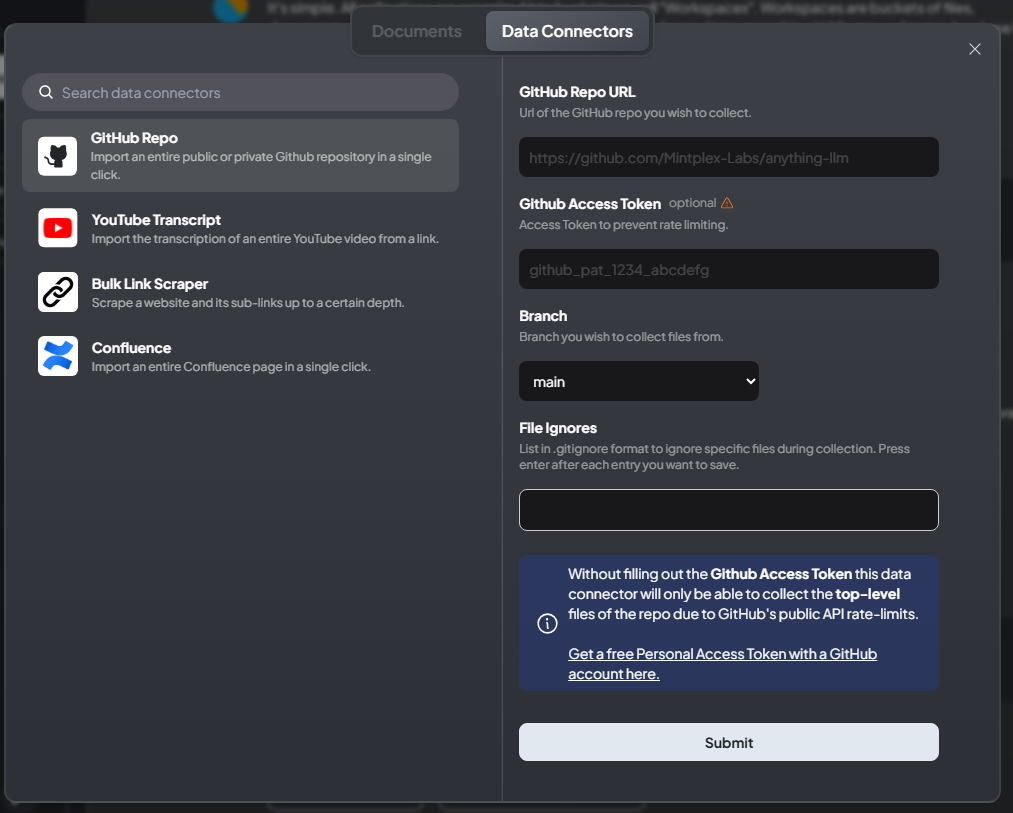

AnythingLLM’s standout feature is its ability to ingest documents and set up workspaces that enable access to RAG features. Coupled with its inbuilt User Management, this is a compelling workgroup tool for Content Creation and Analysis. Compared with Claude Projects, AnythingLLM supports both direct document insertion into the context and RAG Search, increasing the size of knowledge bases that can be incorporated. The Data Ingest and management features are easy to use, and allow direct import from GitHub and Confluence as well as from the local file system.

AnythingLLM Connectors
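The difference between direct document insertion and RAG Search can be illustrated with a small sketch: if the documents fit within a budget they are inserted whole, otherwise only the most relevant chunks are retrieved. This is not AnythingLLM's implementation. It uses naive keyword overlap in place of embedding-based vector search, counts words as a stand-in for tokens, and all function names are hypothetical.

```python
def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def score(query: str, chunk: str) -> int:
    """Naive relevance score: number of query terms that also appear in the chunk."""
    return len(set(query.lower().split()) & set(chunk.lower().split()))


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k chunks with a non-zero score, best match first."""
    ranked = sorted(chunks, key=lambda c: score(query, c), reverse=True)
    return [c for c in ranked[:top_k] if score(query, c) > 0]


def build_context(query: str, documents: list[str], word_budget: int,
                  top_k: int = 2) -> str:
    """Insert documents whole if they fit the budget, else fall back to retrieval."""
    full_text = "\n\n".join(documents)
    if len(full_text.split()) <= word_budget:
        return full_text  # direct insertion: everything goes into the context
    chunks = [c for doc in documents for c in chunk_text(doc)]
    return "\n\n".join(retrieve(query, chunks, top_k))  # RAG-style selection


docs = [
    "Rate limits reset every five hours on the paid plan.",
    "Deployment uses Docker with an embedded database.",
    "Workspaces group documents for each team.",
]
query = "how does deployment with docker work"
print(build_context(query, docs, word_budget=100))  # small enough: all three docs
print(build_context(query, docs, word_budget=10))   # too large: retrieved chunk only
```

Direct insertion gives the model everything but is bounded by the context window, while retrieval scales to much larger knowledge bases at the risk of missing a relevant passage.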

AnythingLLM offers flexible deployment and installation options. A Desktop Download is offered, making single-user usage simple. Self-Hosted Cloud Deployment templates are provided for multiple providers including AWS and Google Cloud Platform. A managed option is also available for people who need multi-user functionality without the setup.

Language and Embedding Model support is comprehensive, covering both Local and API-hosted models.

Overall, AnythingLLM presents itself as a robust, feature-rich platform that balances powerful AI capabilities with user-centric design. It’s particularly well-suited for businesses and organizations looking to implement a comprehensive, customizable AI chat solution with strong document analysis capabilities.

Other Open Source RAG interfaces that are worth evaluating are Danswer, RAGFlow and Verba.

Conclusion

The AI chat interface landscape is evolving rapidly, with innovation spanning both commercial and open-source offerings. Each solution we’ve examined offers unique features catering to diverse needs, from individual power users to enterprise teams.

Individuals and Workgroups

Open-source solutions like Big-AGI, HuggingFace ChatUI, and LibreChat offer unparalleled flexibility and feature richness. These platforms not only allow for local model inference but also provide the freedom to switch between different model providers, future-proofing your AI workflow. They are lightweight enough to run on a laptop for a single user.

Commercial offerings like Claude and ChatGPT continue to innovate, providing powerful, user-friendly interfaces with unique features like Claude’s Artifacts or ChatGPT’s integrated data analysis tools.

Solutions like Big-AGI’s web interface and AnythingLLM’s desktop application are bridging the gap, offering advanced features with minimal setup requirements.

Enterprises

When considering AI chat solutions for larger organizations or teams, additional factors come into play:

- Scalability and user management capabilities

- Data privacy and security features

- Integration with existing tools and workflows

- Total cost of ownership, including setup and maintenance

- Flexibility to adapt to evolving AI models and technologies

Open-source solutions offer extensive customization and control, ideal for organizations with strong technical resources. Commercial offerings provide robust, user-friendly interfaces with enterprise-ready features, suitable for quick deployment and minimal technical overhead. Hybrid options like AnythingLLM bridge the gap, offering advanced features with simplified setup. While AI deployment is still in its early stages, this approach offers a cost-efficient way to explore business needs, capabilities, and options.

Using Natural Language AI

The ability to interact with powerful AI models using natural language in an open-ended chat format is unprecedented. This accessibility opens up new opportunities in complex problem-solving, task definition, and creativity.

For individuals: Start with a solution that matches your technical comfort level. Experiment with different interfaces to find what best enhances your workflow. Open-source options like Big-AGI offer a low-barrier entry point for exploring advanced features.

For enterprises: Begin with a pilot project. Test a few solutions with a small team before scaling up. Consider factors like integration with existing systems, data privacy, and long-term scalability. Commercial offerings like Claude or ChatGPT may offer quicker deployment, while solutions like AnythingLLM provide a balance of customization and ease of use.

The key is to start engaging with these tools, learning their capabilities, and finding ways to apply them effectively in your specific context. The chat interface, augmented with innovative tooling, opens up endless possibilities.