OpenAI GPT Suite - Casual Performance (Jul 24)

Introduction

OpenAI’s model lineup has changed recently. As of early July 2024, their API pricing page showcases two primary models: GPT-4o and GPT-3.5 Turbo, with GPT-4 and GPT-4 Turbo now in the “Older Models” section.

OpenAI’s FAQ offers this guidance:

Use GPT-4o for complex tasks that require advanced reasoning, creativity, or in-depth analysis. Use GPT-3.5 Turbo for simpler tasks like content generation, summarization, or straightforward Q&A.

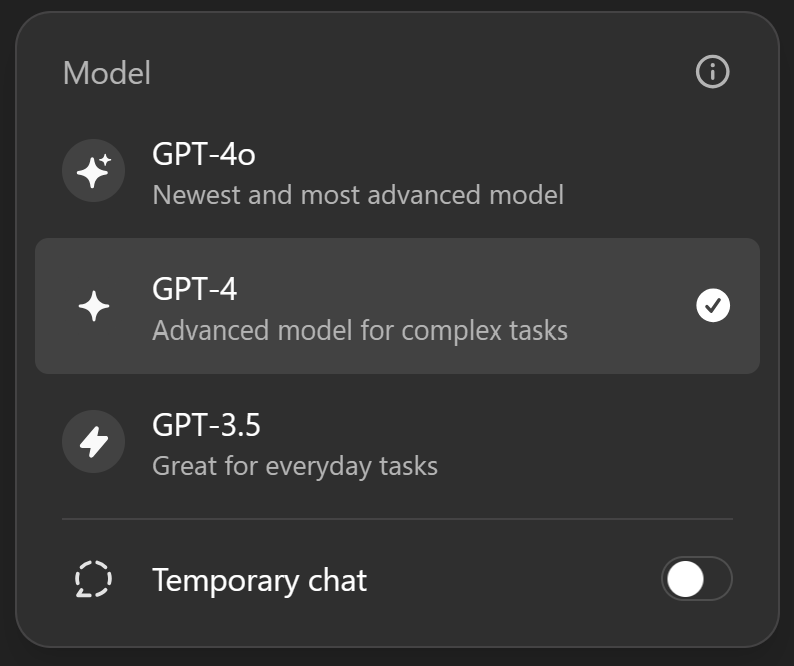

Interestingly, GPT-4 still features prominently on the consumer ChatGPT interface, advertised for complex tasks. Given this, we’ll include it in our comparison.

ChatGPT Model Selection

To evaluate these models’ performance in real-world scenarios, we’ll use our “Casual Prompt” test. This simulates how a well-informed, casual user might interact with these models, providing insights into their practical capabilities and limitations.

Our test will assess the following models:

| Model Name | API Name | Cutoff Date | Input Price (per 1M tokens) | Output Price (per 1M tokens) |
|---|---|---|---|---|
| GPT-3.5 Turbo | gpt-3.5-turbo-0125 | Sep 2021 | $0.50 | $1.50 |
| GPT-4o (Omni) | gpt-4o-2024-05-13 | Oct 2023 | $5.00 | $15.00 |
| GPT-4 | gpt-4-0613 | Sep 2021 | $30.00 | $60.00 |

Information correct at publication date of 03 July 2024

It’s notable that two of these models now have a knowledge cutoff date approaching three years old.

Test Setup

The test will be run with default parameters, including the API default temperature of 1. OpenAI advise tuning this to trade creativity against consistency, but we will use the default, as we did for the Anthropic tests.

OpenAI Temperature Documentation

Benchmark Details

Our casual prompting benchmark evaluates how AI models interpret everyday queries without extensive prompt engineering. The test asks models to score a technical article’s suitability for two personas: Alice (a technical expert) and Bob (a non-technical business user), on a scale of 1-10. We run this test 50 times for each model, both with an empty system prompt and with “You are a helpful AI assistant” as the system prompt. The difference between Alice’s and Bob’s scores indicates how well the model distinguishes the personas’ needs, with a larger gap (ideally 3-5 points) suggesting better performance. This approach helps assess the models’ real-world applicability and reliability in common use scenarios.
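The procedure above can be sketched roughly as follows. This is a minimal illustration, not the harness used for the article: `build_messages`, `run_condition`, and the `ask` callable are hypothetical names, and we assume the "empty" condition simply omits the system message. In a real run, `ask` would wrap a chat completion request and parse the two scores from the reply.

```python
from statistics import mean
from typing import Callable, Optional

RUNS_PER_CONDITION = 50  # the article runs each condition 50 times

# The two system prompt conditions described above.
SYSTEM_PROMPTS = {
    "Empty": None,
    "Helpful": "You are a helpful AI assistant",
}


def build_messages(user_prompt: str, system_prompt: Optional[str]) -> list:
    """Build a chat-style message list, omitting the system message when empty (assumption)."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def run_condition(
    ask: Callable[[list], tuple],
    user_prompt: str,
    system_prompt: Optional[str],
    runs: int = RUNS_PER_CONDITION,
) -> float:
    """Call `ask` repeatedly and return the mean (Alice - Bob) score difference.

    `ask` is a hypothetical callable that sends the messages to a model
    and returns the pair (alice_score, bob_score).
    """
    differences = []
    for _ in range(runs):
        alice, bob = ask(build_messages(user_prompt, system_prompt))
        differences.append(alice - bob)
    return mean(differences)
```

Passing the model call in as a function keeps the loop testable offline: a stub that always returns `(8, 4)` yields a mean difference of 4.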

Test Results

Presented below are the Score Difference results for the OpenAI suite both with and without a system prompt.


Score Differences - All Scenarios

| Frequency | -1 | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|
| GPT-3.5 (Empty) | 49 | 0 | 0 | 0 | 1 | 0 | 0 |
| GPT-3.5 (Helpful) | 0 | 0 | 0 | 50 | 0 | 0 | 0 |
| GPT-4o (Empty) | 0 | 0 | 0 | 0 | 2 | 47 | 1 |
| GPT-4o (Helpful) | 0 | 0 | 0 | 0 | 21 | 29 | 0 |
| GPT-4 (Empty) | 0 | 0 | 0 | 0 | 1 | 45 | 4 |
| GPT-4 (Helpful) | 0 | 0 | 0 | 0 | 0 | 14 | 36 |

We’ll briefly cover Token Usage before diving deeper into the scores.

Token Usage


Completion Tokens - All Scenarios

| Model & Prompt | 0-50 | 51-100 | 101-150 | 151-200 | 201-250 | 251+ |
|---|---|---|---|---|---|---|
| GPT-4o (Empty) | 46 | 0 | 0 | 4 | 0 | 0 |
| GPT-4o (Helpful) | 48 | 0 | 0 | 2 | 0 | 0 |
| GPT-3.5 (Empty) | 50 | 0 | 0 | 0 | 0 | 0 |
| GPT-3.5 (Helpful) | 50 | 0 | 0 | 0 | 0 | 0 |
| GPT-4 (Empty) | 50 | 0 | 0 | 0 | 0 | 0 |
| GPT-4 (Helpful) | 50 | 0 | 0 | 0 | 0 | 0 |

Output is terse across all the models, typically returning just the scores. Token usage is consistent across the suite, with only GPT-4o showing any deviation.

| Model | Sys Prompt | Mean | Median | Min | Max | Std Dev |
|---|---|---|---|---|---|---|
| GPT-3.5 | Empty | 11.00 | 11.00 | 11 | 11 | 0.00 |
| GPT-3.5 | Helpful | 11.00 | 11.00 | 11 | 11 | 0.00 |
| GPT-4o | Empty | 29.68 | 11.00 | 11 | 187 | 51.35 |
| GPT-4o | Helpful | ▼ 17.58 | 11.00 | 11 | ▼ 176 | ▼ 32.56 |
| GPT-4 | Empty | 11.00 | 11.00 | 11 | 11 | 0.00 |
| GPT-4 | Helpful | 11.00 | 11.00 | 11 | 11 | 0.00 |

GPT-4o occasionally provides an explanation of its scoring; the inclusion of a system prompt seems to lower the probability of that occurring. An example of one of those outputs is below:

ALICE_SCORE=7

BOB_SCORE=3

### Explanation:

**Alice:**

- The article provides a clear and concise overview of the issue at hand.

- It uses industry-specific terminology (e.g., BGP, ISOC, FCC) that Alice would be familiar with.

- It lays out arguments logically and directly.

- However, it includes some narrative context and broader implications that might be considered extraneous by Alice, hence not a perfect 10.

**Bob:**

- The article dives into technical specifics without simplifying them for a non-technical audience.

- It lacks analogies or simplified explanations to make the technical content accessible.

- The reasoning behind some decisions (like ISOC's stance) is not broken down in a way that Bob would appreciate.

- Therefore, while informative, it does not present the Big Picture in a way that Bob prefers.

The GPT models appear to treat the “Report the scores in this format:” part of the prompt as a strict instruction.

In embedded or automated scenarios that expect this behaviour from OpenAI models, GPT-4o’s occasional verbosity might lead to unforeseen issues. Depending on the task, this could cause failures that a simple retry would likely resolve.
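One way to make an automated consumer tolerant of this verbosity is to extract the two score lines and ignore anything else in the reply. The sketch below assumes the `ALICE_SCORE=` / `BOB_SCORE=` line format shown in the example output; `parse_scores` is a hypothetical helper, and a `None` result is where a caller might choose to retry.

```python
import re
from typing import Optional, Tuple

# Matches lines such as "ALICE_SCORE=7" or "BOB_SCORE = 3", one per line.
SCORE_PATTERN = re.compile(
    r"^[ \t]*(ALICE|BOB)_SCORE[ \t]*=[ \t]*(\d{1,2})[ \t]*$",
    re.MULTILINE,
)


def parse_scores(reply: str) -> Optional[Tuple[int, int]]:
    """Return (alice, bob) if both scores are present and within 1-10, else None."""
    found = {name: int(value) for name, value in SCORE_PATTERN.findall(reply)}
    has_both = "ALICE" in found and "BOB" in found
    if has_both and all(1 <= score <= 10 for score in found.values()):
        return found["ALICE"], found["BOB"]
    return None  # caller may retry the request


verbose_reply = "ALICE_SCORE=7\n\nBOB_SCORE=3\n\n### Explanation:\n\n**Alice:**\n- ..."
print(parse_scores(verbose_reply))
```

Because the pattern only looks at lines that carry a score, a terse reply and a reply followed by an explanation parse to the same pair.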

Score Analysis

The scoring performance of OpenAI’s GPT models shows some interesting and unexpected patterns.

| Model | Sys Prompt | Alice Score | Bob Score | Difference |
|---|---|---|---|---|
| GPT-3.5 | Empty | 7.04 | 8.02 | -0.98 |
| GPT-3.5 | Helpful | ▲ 8.00 | ▼ 6.00 | ▲ 2.00 |
| GPT-4o | Empty | 7.98 | 4.00 | 3.98 |
| GPT-4o | Helpful | ▼ 7.00 | ▼ 3.42 | ▼ 3.58 |
| GPT-4 | Empty | 9.98 | 5.76 | 4.22 |
| GPT-4 | Helpful | ▼ 8.72 | ▼ 4.00 | ▲ 4.72 |

GPT-3.5 Turbo unexpectedly favours Bob over Alice without a system prompt, suggesting a misinterpretation of personas or content. The “Helpful AI Assistant” prompt corrects this, resulting in an appropriate 2-point difference favouring Alice.

GPT-4o consistently differentiates between Alice and Bob, with or without the system prompt. Bob’s scores (3-4 range) align well with expectations, while Alice’s scores, though slightly low, indicate good suitability. The system prompt narrows the score gap, lowering both suitability scores, suggesting a more conservative approach.

GPT-4 shows the highest differentiation, especially with the system prompt. It rates the article as highly suitable for Alice, often exceeding expected ranges. Without a system prompt, it produced four outlier score differences of 7 (Alice=10, Bob=3). While the differentiation score is impressive, the individual suitability scores seem overstated - especially pronounced with no system prompt.

Removing the 4 outlier scores without the system prompt returns a mean score difference of 3.98 - matching GPT-4o, but with markedly different baseline scores for the personas.
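The arithmetic behind that figure can be checked with a short calculation. The per-run values here are an assumption: the frequency table has no column for a difference of 7, so we take GPT-4's empty-prompt runs to be one difference of 3, forty-five of 4, and four of 7, which reproduces the reported mean of 4.22.

```python
from statistics import mean

# Assumed per-run score differences for GPT-4 with an empty system prompt:
# one 3, forty-five 4s, and the four outliers of 7 (Alice=10, Bob=3).
differences = [3] * 1 + [4] * 45 + [7] * 4

overall = mean(differences)                           # all 50 runs
trimmed = mean([d for d in differences if d != 7])    # 46 runs, outliers removed

print(f"all runs: {overall:.2f}, without outliers: {trimmed:.2f}")
```

Under this assumption the trimmed mean is 183 / 46, which rounds to the 3.98 quoted above.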

Despite GPT-4’s high differentiation, GPT-4o’s more moderate, well-aligned scores may be more practical in real-world applications. This highlights that the difference alone isn’t enough to gauge usefulness in this scenario.

Testing Costs

| Model | Sys Prompt | Output Tokens | Input Cost | Output Cost | Total Cost |
|---|---|---|---|---|---|
| GPT-3.5 | Empty | 550 | $0.0188 | $0.0008 | $0.0196 |
| GPT-3.5 | Helpful | 550 | $0.0189 | $0.0008 | $0.0197 |
| GPT-4o | Empty | 1,484 | $0.1865 | $0.0223 | $0.2088 |
| GPT-4o | Helpful | 879 | $0.1880 | $0.0132 | $0.2012 |
| GPT-4 | Empty | 550 | $1.1250 | $0.0330 | $1.1580 |
| GPT-4 | Helpful | 550 | $1.1340 | $0.0330 | $1.1670 |

For this test, the OpenAI GPT suite has been parsimonious in output token usage, running the tests cost-effectively and efficiently. GPT-4 pricing stands out here, especially as it is listed as a legacy model - and in our view was outperformed by GPT-4o at under a fifth of the cost.
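The output costs in the table follow directly from the token counts and the per-million-token prices listed earlier. A minimal sketch of that calculation, with `token_cost` as a hypothetical helper name:

```python
def token_cost(tokens: int, price_per_million: float) -> float:
    """Cost in dollars for a token count at a price quoted per one million tokens."""
    return tokens * price_per_million / 1_000_000


# Output prices per 1M tokens, from the model table in this article.
OUTPUT_PRICE = {"GPT-3.5": 1.50, "GPT-4o": 15.00, "GPT-4": 60.00}

# Total output tokens across 50 runs with an empty system prompt.
OUTPUT_TOKENS = {"GPT-3.5": 550, "GPT-4o": 1484, "GPT-4": 550}

for model, tokens in OUTPUT_TOKENS.items():
    cost = token_cost(tokens, OUTPUT_PRICE[model])
    print(f"{model}: ${cost:.4f}")
```

The same function applies to input costs, although the article does not publish input token counts, so only the output side can be reproduced from the figures given here.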

Conclusion

GPT-4o, launched on May 13, 2024, shows improved performance in our casual prompting test, effectively differentiating between technical and non-technical audiences. However, the OpenAI GPT suite presents several noteworthy aspects:

- Knowledge currency: GPT-3.5 Turbo and GPT-4 have cutoff dates approaching three years old (September 2021), showing the models’ relative age. Although GPT-4 Turbo has a more recent cut-off (Oct 2023), it is described as “previous” and replaced by GPT-4o, with GPT-3.5 Turbo advertised for fast, inexpensive simple tasks. GPT-4 Turbo is also priced at double the cost of GPT-4o.

- Performance spectrum: GPT-3.5 Turbo’s weak performance, especially without a system prompt, highlights an issue in OpenAI’s current lineup. Although advertised and intended for use as an inexpensive task model, it is no longer competitive at this age and price point. Our view is that GPT-4o also outperformed GPT-4 in this task, producing more consistent, usable scoring.

- Pricing strategy: GPT-4’s high cost is difficult to justify given its age and GPT-4o’s superior performance at a fraction of the price.

OpenAI’s GPT-4o announcement focussed on their multi-modal innovation, offering several impressive demos of low-latency audio and visual capabilities alongside core improvements to reasoning and performance. However, these features have been subject to delays, and are potentially coming at the expense of refreshed text-generation models. The currently advertised line-up makes it hard to distinguish the price, performance, and knowledge currency of each offering.

The next few months promise to be exciting in the AI landscape. With new releases expected from Anthropic and the continued evolution of open-source models like Qwen 2, Llama 3, and Mistral/Mixtral, competition in the field is intensifying. These developments, alongside potential updates from OpenAI, could significantly reshape the performance and pricing dynamics in the AI model market.