Claude Engineer - Build with Sonnet 3.5

Introduction

Claude Engineer GitHub

Last week, Claude Engineer 2.0 was released, advancing the field of AI-assisted software development. This tool combines intelligent context management, strategic prompting, and file manipulation capabilities. The result is a powerful command-line interface designed to enhance various software development tasks.

Introducing Claude Engineer 2.0, with agents! 🚀

— Pietro Schirano (@skirano) July 15, 2024

Biggest update yet with the addition of a code editor and code execution agents, and dynamic editing.

When editing files (especially large ones), Engineer will direct a coding agent, and the agent will provide changes in batches.… pic.twitter.com/iuajZCDTVQ

Claude Engineer is a compact yet powerful tool, consisting of a single Python program of about 1200 lines and an accompanying dependencies file. Notably, around 25% of the code comprises prompts designed to effectively drive the LLM.

The tool harnesses Anthropic’s Claude 3.5 Sonnet model to provide an advanced interactive command-line interface. It extends the language model’s capabilities with practical features such as file system operations, web search functionality, code analysis, and execution capabilities. This combination allows Claude Engineer to assist with a wide range of software development tasks, from project structuring to code refinement.

The version used in this article was cloned from GitHub on 22nd July 2024. No license is specified on the GitHub repository.

Deployment and Setup

Claude Engineer’s setup is minimal:

- Clone the repository and install dependencies.

- Set up an

.envfile with API keys for Anthropic and Tavily Search (free plan available). - Run

python main.pyto launch the application.

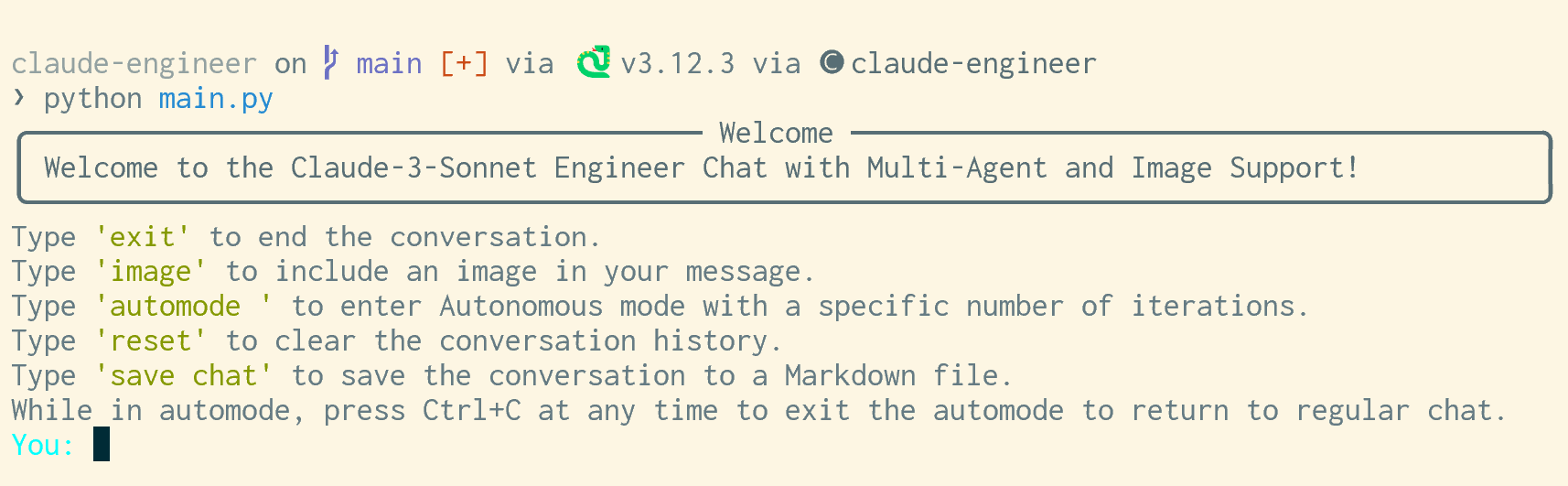

Starting Claude Engineer

This streamlined process allows developers to quickly integrate Claude Engineer into their workflow, accessing a suite of AI-powered development features with minimal overhead.

Claude, engineer thyself

Starting Claude Engineer shows some help text, and drops us straight to a prompt input. I made a copy of main.py to main_update.py to test Claude Engineer against itself.

Streamlit App

Lets see if Claude Engineer can turn itself in to a StreamLit web application rather than a command line application…

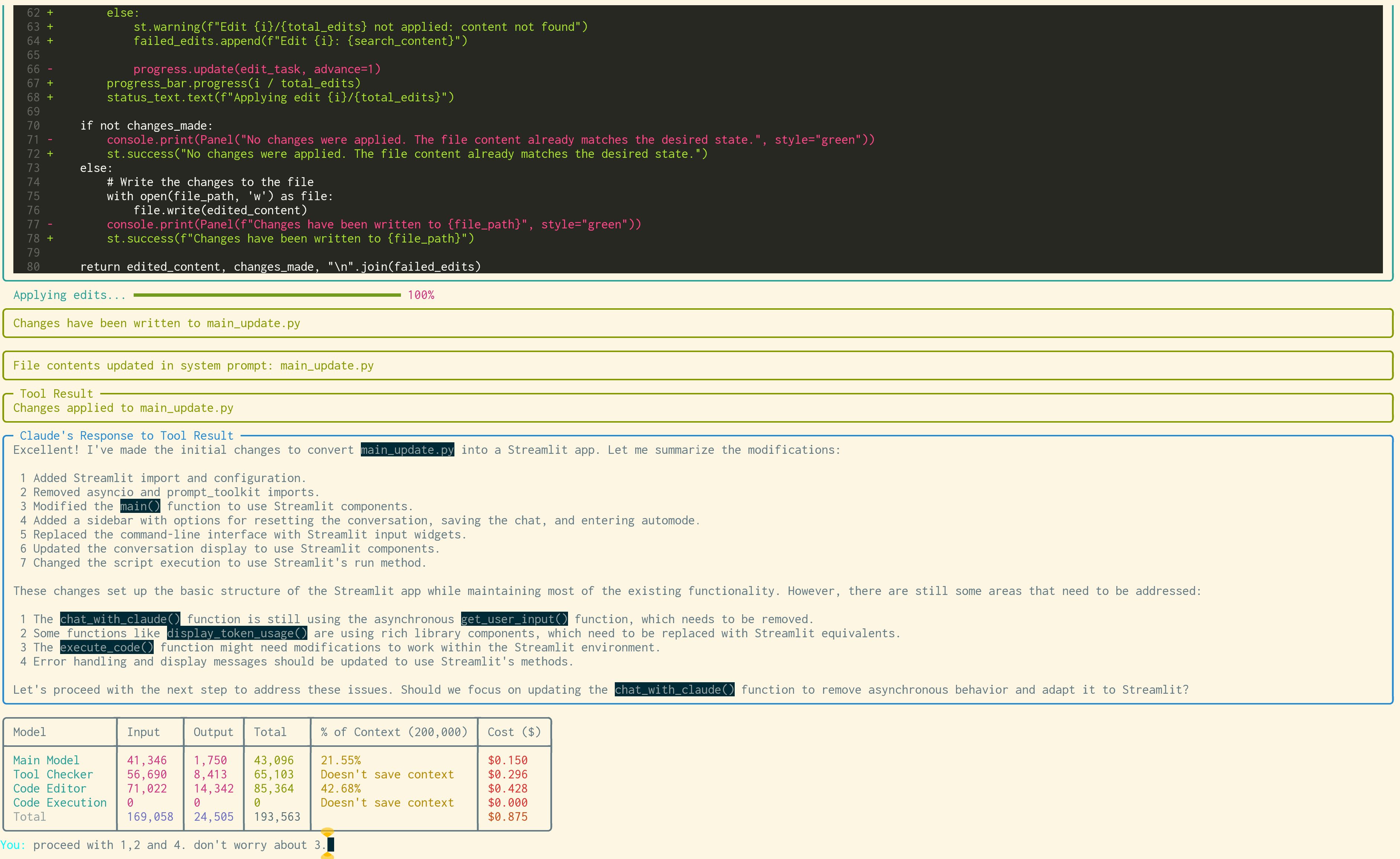

Claude Engineer creating a Streamlit version of itself

Impressively, Claude Engineer guided itself to create the app, with most of the basic functionality working.

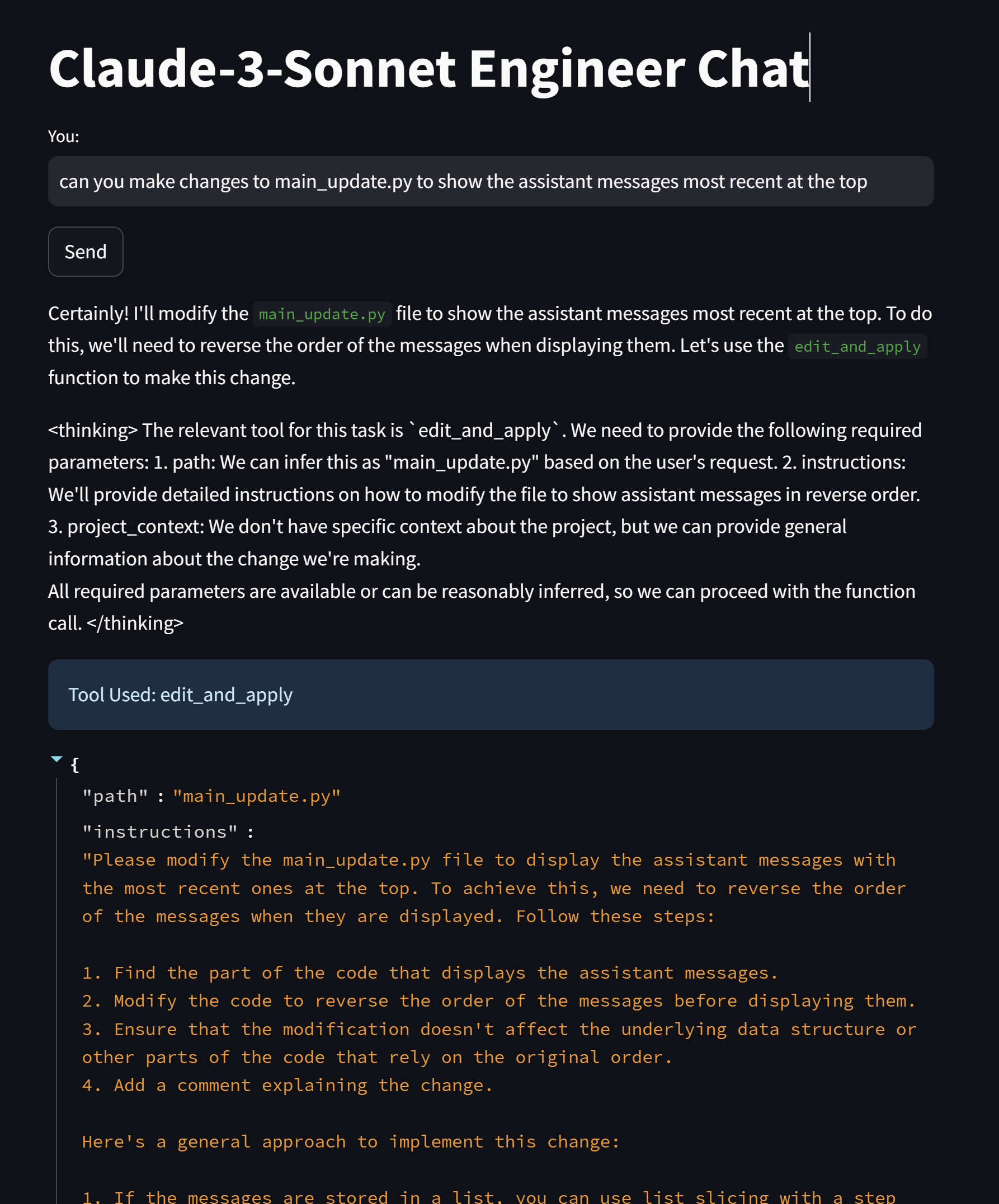

Claude Engineer modifying a Streamlit version of itself

I was able to use this version to hot reload changes and defect fixes to Claude Engineer as I used it. I wouldn’t necessarily recommend that (I did end up breaking it!), but it was a fun experiment.

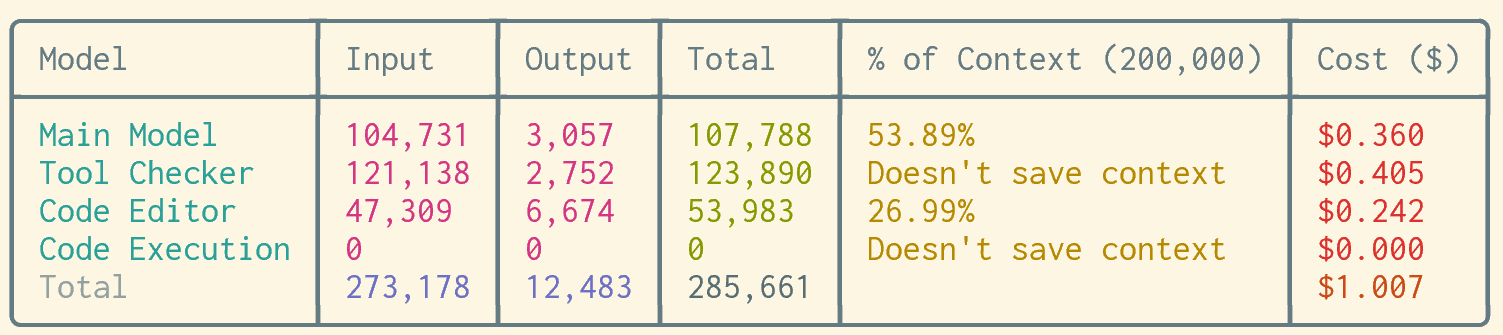

Showing Context Usage

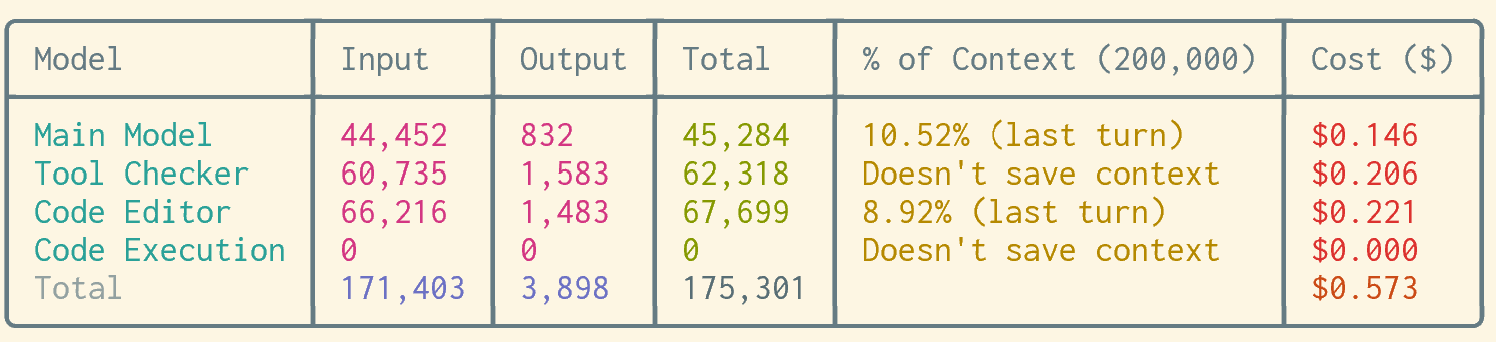

After each round of User Input, Main Model response and Tool Execution, a display of cumulative token usage and cost per model is displayed.

Cumulative Token Count and Cost

For my uses, I’d prefer a display of the Context usage for the turn rather than the session total, as this informs me of whether I am approaching the limits of the model and need to summarise or replan my changes.

I did this change a few times with Claude Engineer; and it was a good reminder that coding with LLMs isn’t always straightforward. The same input prompt can give quite different outputs. When asked to review how the table was calculated, Claude became fixed on the display function and missed that the “input” and “output” counts against each model were cumulative - and needed prompting to re-review. It was also intending an unnecessary complex change - so we were able to use a simpler approach.

Previous turn context usage

Having completed the change, Claude suggested some further improvements to the feature, such as warnings when approaching the limit, auto-summarisation, predicting next turn and showing proportion of file content to instructions.

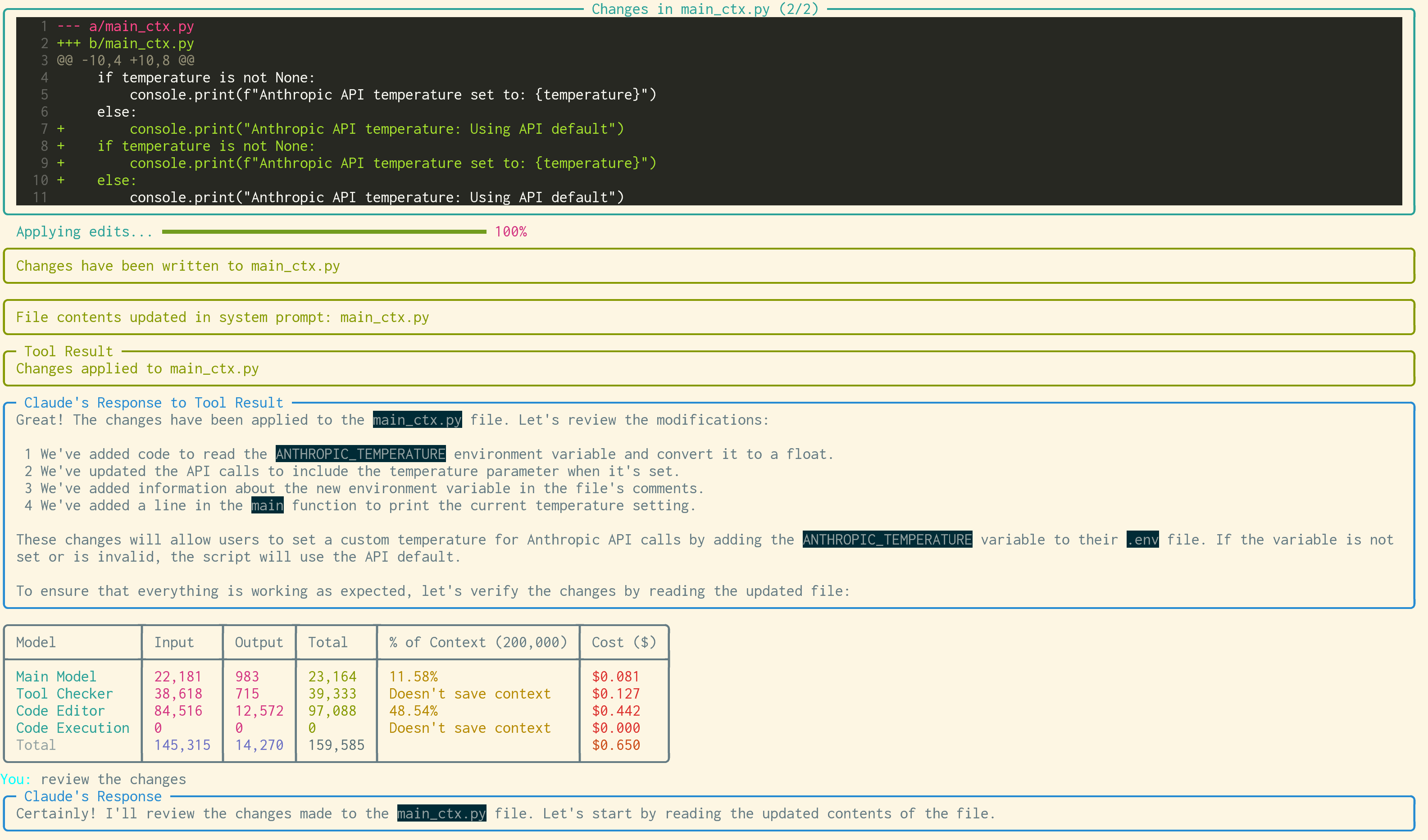

Lowering the Temperature

It took a few tries to get the above Context Usage Calculator change working correctly. In the first couple of attempts, it felt as though the temperature of the model was a little too high. I used Claude Engineer to find out if the Temperature was being set, it confirmed it wasn’t, and once more, Claude Engineer was able to update itself to make the temperature configurable. The default temperature in the API is 1.

The following prompt was given:

update main_ctx.py to use the anthropic api to set the temperature of the call based on an environment variable (set in .env). if not set, it should use the API default (don’t supply a default)

This succesfully updated the application to read an ANTHROPIC_TEMPERATURE environment variable, as well as update the guidance and comments.

Self modifying to add temperature configuration

The Edit and Apply tool is responsible for making changes to the underlying files, and works by asking Claude to generate <SEARCH> and <REPLACE> blocks.

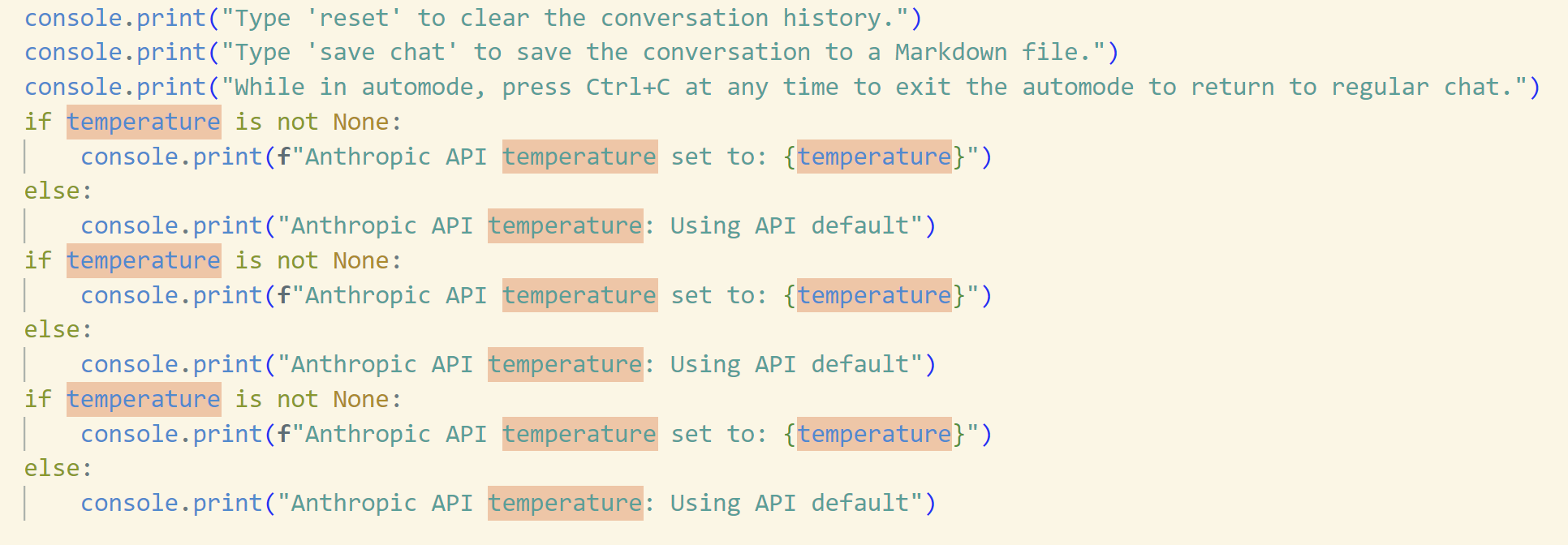

Changes in triplicate

This can take a few tries (Engineer defaults to 3 attempts). This may be because the Search is sensitive and small transcription errors cause failures, or because an earlier change in a batch overlaps a later change.

That happened during this session: causing some of the changes to appear in triplicate. While unusual and easily fixed, this is worth being aware of, especially considering the token usage implications.

Prompt Engineering

One of the things that makes Claude Engineer work so well are clear separation of duties between the models (Main Model, Tool Checker, Code Editor and Code Execution).

The Main Model orchestrates the activities for Claude Engineer, and each interaction is driven by a clean, well written prompt. This architecture allows the Context presented to Claude to remain as clear and direct as possible, with complete and up-to-date versions of the files being presented to the underlying model.

One example of the internal prompts is reproduced below:

Chain of Thought Prompt

(This prompt is appended to the system prompt to encourage chain-of-thought reasoning).

Answer the user’s request using relevant tools (if they are available).

Before calling a tool, do some analysis within <thinking></thinking> tags.

First, think about which of the provided tools is the relevant tool to answer the user’s request.

Second, go through each of the required parameters of the relevant tool and determine if the user has directly provided or given enough information to infer a value. When deciding if the parameter can be inferred, carefully consider all the context to see if it supports a specific value. If all of the required parameters are present or can be reasonably inferred, close the thinking tag and proceed with the tool call. BUT, if one of the values for a required parameter is missing, DO NOT invoke the function (not even with fillers for the missing params) and instead, ask the user to provide the missing parameters. DO NOT ask for more information on optional parameters if it is not provided.

Do not reflect on the quality of the returned search results in your response.

This prompt does very well in making sure that Tool usage is well considered, and doesn’t attempt phantom/unachievable operations. It is straightforward to use Claude Engineer to, for example, extract and annotate the prompts to a markdown file for inspection, or to make them configurable through a file.

Conclusion

Claude Engineer stands out to me as one of the most significant releases in AI tooling this year. In just 1200 lines of Python code, it assembles well-designed components, prompts, and tools into a powerful and flexible system. Its terse, elegant, and directly accessible workings make it a blank canvas for customizing to specific needs, be they coding or other requirements. This transparency not only makes using and extending the tool a breeze but also showcases how it works with the LLM, rather than hiding complex implementation details.

This tool effectively solves the round-trip problem for Projects we discussed in our Claude Projects article: keeping files updated in-context and managing in-place editing. Its context management across four different task models, coupled with Sonnet 3.5’s raw coding capabilities, maintain consistent high quality outputs. The well-managed Context Stuffing approach, while potentially more expensive than RAG lookup methods, delivers a level of quality and capability that can justify the cost.

As with all LLM coding tools, guidance and reproducibility can be an issue, with the same input prompts potentially delivering divergent outputs and solutions of different quality levels. However, the in-place file editing makes it easy to leverage existing source code control diff and revert tools where needed, especially if things go awry.

The release of Claude Engineer underscores the rapid pace of innovation in AI tooling and models. It’s a welcome addition to the increasingly competitive and innovative space we explored in our previous roundup of chat interfaces. With Opus 3.5 due later this year, we can expect further improvements to coding capabilities, though the cost implications remain to be seen (Opus 3 is currently five times the cost of Sonnet).

Claude Engineer is best for those who can guide and work within the limitations of using LLMs for coding, while removing much of the associated drudgery. Its adaptable architecture opens up possibilities beyond software development - I anticipate adapting it for various non-software use-cases as well.

In the fast-evolving landscape of AI-assisted development, Claude Engineer paired with Sonnet 3.5 represents a significant step forward, offering capabilities that simply didn’t exist 12 months ago.