ChatGPT and Claude.ai - Chat Productivity Techniques

Introduction

This guide provides practical tips for managing chat conversations with ChatGPT, Claude, and similar services. It focuses on techniques that can significantly improve the accuracy and relevance of responses, especially in longer conversations.

Getting the best results involves more than just prompt engineering. Effective chat management is crucial for complex queries and tasks, and it produces more relevant, higher-quality responses.

Understanding Context Windows

AI models have a limit to how much conversation history they can consider, known as the context window. The window is measured in tokens, which are units representing parts of words or punctuation.

Here’s a comparison of context window sizes for some current models:

| Service (Model) | Knowledge Cutoff | Context Window (Tokens) | Approx. Words | Approx. Book Pages |
|---|---|---|---|---|
| claude.ai (Claude 3.5 Sonnet) | Apr 2024 | 200,000 | ~150,000 | ~500 |
| ChatGPT (GPT-4o) | Oct 2023 | 128,000 | ~96,000 | ~320 |
| meta.ai (Meta Llama 3.1) | Dec 2023 | 128,000 | ~96,000 | ~320 |
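The Words and Book Pages columns are rough conversions from tokens. A minimal sketch of that arithmetic in Python, assuming about 0.75 English words per token and about 300 words per printed page (the ratios the table's figures imply; real ratios vary with language and content):

```python
def estimate_capacity(tokens: int) -> tuple[int, int]:
    """Rough (words, pages) estimate for a context window of `tokens` tokens.

    Assumes ~0.75 English words per token and ~300 words per book page.
    """
    words = tokens * 3 // 4  # ~0.75 words per token
    pages = words // 300     # ~300 words per page
    return words, pages


for model, window in [("Claude 3.5 Sonnet", 200_000), ("GPT-4o", 128_000), ("Llama 3.1", 128_000)]:
    words, pages = estimate_capacity(window)
    print(f"{model}: {window:,} tokens ~ {words:,} words ~ {pages:,} pages")
```

For the first row this prints `Claude 3.5 Sonnet: 200,000 tokens ~ 150,000 words ~ 500 pages`, matching the table.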

Both the length and the quality of the conversation affect the AI's ability to produce sharp, coherent responses[1]. Long, poorly managed conversations can lead to overly generalized responses, failure to recall earlier parts of the conversation, or an inability to follow instructions.

Fortunately, there are a number of strategies that can be used to optimize chat interactions, maintain conversation quality, and make the most of the available context window. The following tips explore these techniques in detail, helping you to enhance the effectiveness and efficiency of your AI-assisted tasks.

Managing Chats

1. Start Fresh for Each Topic

Begin a new chat session for each distinct topic or task. This keeps the context window focused, resulting in more precise and relevant responses. For example, if you’re working on a marketing strategy in one chat and then need to analyze financial data, start a new chat for the financial task. This prevents the AI from mixing concepts or trying to find connections between unrelated topics.

Each new chat starts with a clean slate, allowing the AI to concentrate fully on the current subject without interference from previous conversations[2].

2. Front-load Context and Instructions

When starting a new chat, begin by introducing important long-form content that you want the AI to reference throughout the conversation. This could include product descriptions, company policies, or relevant background information.

Provide this content in larger blocks rather than piecemeal. Avoid interleaving instructions or questions between content sections, as this can confuse the AI about what is instruction and what is reference material.

When introducing substantial blocks of content, place your specific instructions or queries towards the end of your message. This ensures that the AI has all the necessary context before processing your requests.

For example:

```
Here's our company's new product description:

[Insert full product description]

Here's our target market analysis:

[Insert complete market analysis]

Based on this information, please suggest three key marketing strategies for our product launch.
```

This approach helps the AI maintain a clear distinction between reference material and tasks, leading to more focused and accurate responses.

3. Use Delimiters

For more intricate conversations or when dealing with multiple data sources, using delimiters can help organize your input more effectively. This advanced technique is particularly useful for complex tasks or when you need to clearly separate different types of information.

Use delimiters such as triple quotes (""") or XML-style tags (<TAG>...</TAG>) to structure your prompts and separate different parts of your input. This approach aids the AI in distinguishing between various elements of your request, leading to more precise and organized responses.

Claude.ai responds particularly well to XML-style tags, because Claude has been trained to recognize them. Similar techniques also work with ChatGPT.

For example:

```
We will be analyzing consumer segments and social media strategies for the following products:

<products>
  <product name="xyz">
    <datasheet>
    [Insert product XYZ details here]
    </datasheet>
  </product>
  <product name="abc">
    <datasheet>
    [Insert product ABC details here]
    </datasheet>
  </product>
</products>

Please provide a comparative analysis of the target consumer segments for these products, considering their unique features and potential social media appeal.
```

This structured approach helps the AI maintain a clear distinction between different data sources and instructions, leading to more focused and accurate responses in complex scenarios.
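If you assemble prompts like this often, the tags can be generated rather than typed by hand. A minimal Python sketch; the function name `build_tagged_prompt` and its arguments are hypothetical, and the tag names simply mirror the example prompt in this tip:

```python
from xml.sax.saxutils import escape, quoteattr


def build_tagged_prompt(intro: str, datasheets: dict[str, str], task: str) -> str:
    """Wrap each product datasheet in XML-style tags, keeping the task at the end.

    `datasheets` maps a product name to its raw datasheet text (hypothetical input).
    """
    parts = [intro, "", "<products>"]
    for name, text in datasheets.items():
        parts.append(f"<product name={quoteattr(name)}>")  # quoteattr adds the quotes
        parts.append("<datasheet>")
        parts.append(escape(text))  # escape &, < and > so content can't be mistaken for tags
        parts.append("</datasheet>")
        parts.append("</product>")
    parts += ["</products>", "", task]
    return "\n".join(parts)


prompt = build_tagged_prompt(
    "We will be analyzing consumer segments and social media strategies for the following products:",
    {"xyz": "[product XYZ details]", "abc": "[product ABC details]"},
    "Please provide a comparative analysis of the target consumer segments for these products.",
)
print(prompt)
```

Escaping is a cautious design choice: if a datasheet itself contains text such as `</datasheet>`, it cannot accidentally close the tag early.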

4. Batch Instructions

In long conversations with AI models, the number of back-and-forth exchanges significantly impacts efficiency. Each time you send a message, the AI processes the entire conversation history anew. This means that frequent, short exchanges are inherently inefficient, as the AI repeatedly reprocesses similar information.

Claude.ai long chat messages

To optimize your interactions, particularly in complex discussions, group related instructions or questions into a single, well-structured message. For example:

```
Let's analyze the current state of electric vehicles (EVs). Please address the following points:

1. Summarize the top 3 bestselling EV models globally in the past year.
2. Explain the main challenges facing widespread EV adoption.
3. Describe recent innovations in battery technology that could impact the EV market.
4. Predict how the EV market share might change in the next 5 years.

For each point, provide 2-3 sentences of explanation and, where applicable, include relevant statistics or data points.
```

This batching technique offers a key advantage: it keeps all instructions together, preventing them from being interspersed with various input and output messages. This coherent structure makes it easier for the AI to maintain focus and produce more consistent, interconnected responses. It reduces the risk of the AI losing track of earlier points or context as the conversation progresses.

When batching instructions, consider numbering your questions, providing specific guidance on the desired response format, and grouping related tasks together. This approach not only conserves resources and quotas but also tends to yield more comprehensive and coherent results, especially in complex, multi-faceted discussions. Also consider the sequence of instructions: if later instructions would benefit from the result of an earlier one, put them in that order (guided Chain of Thought).
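The cost of many short exchanges can be illustrated with a simplified model in which the AI re-reads the entire history every time it replies. This Python sketch is an illustration under stated assumptions, not how any particular service bills or caches tokens: it counts only the input tokens read per turn, ignores prompt caching, and uses hypothetical token counts.

```python
def tokens_read(turns: list[tuple[int, int]], setup_tokens: int = 0) -> int:
    """Total input tokens the model reads across a conversation.

    `turns` is a list of (user_message_tokens, reply_tokens) pairs.
    Simplification: every reply requires re-reading the whole history so far.
    """
    history = setup_tokens
    total = 0
    for user_tokens, reply_tokens in turns:
        history += user_tokens
        total += history         # the model reads everything up to this message
        history += reply_tokens  # its reply becomes part of the history
    return total


# Hypothetical numbers: 2,000 tokens of front-loaded context, four questions.
separate = tokens_read([(50, 300)] * 4, setup_tokens=2_000)  # four short exchanges
batched = tokens_read([(200, 1_200)], setup_tokens=2_000)    # one batched message
print(separate, batched)  # 10300 2200
```

The gap widens with every extra round trip, because each one also re-reads all of the earlier replies.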

5. Explicitly Reference Previous Information

In long conversations, AIs may struggle to identify the most relevant information from earlier exchanges. To ensure the AI focuses on the right context, explicitly refer to specific points or documents you’ve previously discussed or shared.

When referencing earlier information, be as specific as possible. For example, "Referring to the product data sheet I shared at the start of our conversation, please update the second bullet point under 'Key Features'" is more effective than a vague request to “update the features”. This specificity helps the AI locate and use the correct information, especially in lengthy discussions.

This approach is particularly important when working on iterative tasks, such as refining a document through multiple revisions. As the conversation progresses, it can become unclear which version of the document the AI should be referencing. In such cases, clearly indicate which version you’re referring to, or consider summarizing the current state before requesting further changes.

By being explicit in your references, you help the AI maintain consistency, reduce redundancy, and build upon previous points effectively. This practice ensures that the AI’s responses remain relevant and coherent throughout the conversation, even as the context grows more complex.

6. Edit Prompts In-Place

A powerful approach to refining your AI interactions is to edit your original prompts directly in the chat. Most interfaces allow you to modify your previous messages, creating a new branch in the conversation.

By editing and re-running your prompt in-place, you can guide the AI more precisely towards your desired outcome. This method offers several advantages:

Claude.ai in-place prompt edit

- Context Preservation: Editing in-place maintains the conversation’s overall structure while improving specific parts.

- Efficiency: It reduces the need for multiple back-and-forth exchanges to clarify your intent.

- Precision: You can fine-tune your instructions, questions, or context to elicit more accurate and relevant responses.

- Context Window Conservation: Unlike adding new messages, editing in-place doesn’t consume additional space in the limited context window, allowing for longer, more complex conversations.

- Learning Opportunity: By observing how slight changes in your prompt affect the AI’s output, you can develop more effective prompting strategies.

To maximize the benefits of this approach, craft clear, concise inputs by reviewing and editing your messages before sending. Focus on specificity, clarity, and relevance. This in-place editing technique combines the benefits of regeneration with refined input, streamlining your conversation with the AI and ultimately saving time while improving the quality of your interaction.

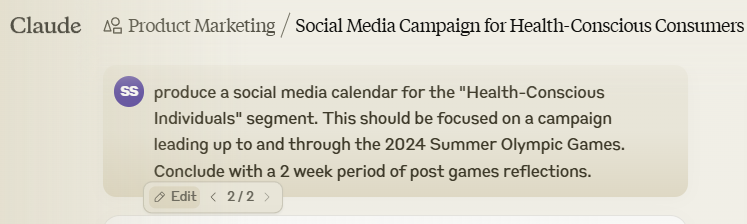

7. Regenerate and Branch Responses

AI responses involve an element of randomness, which leads to varied outputs for the same input. This variability can significantly alter the trajectory of a conversation, potentially leading to different outcomes, especially in creative or open-ended tasks.
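The randomness comes from how the next word is chosen: the model scores each candidate, and one candidate is sampled in proportion to those scores rather than always taking the top one. A toy Python sketch of this sampling step; the candidate words, their scores and the `temperature` values are all hypothetical, and real services do not necessarily expose or use these exact settings:

```python
import math
import random


def sample_next_word(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick one word at random, weighted by a softmax over the scores.

    Lower temperature sharpens the distribution towards the top-scoring word;
    higher temperature flattens it, making less likely words more common.
    """
    scaled = {word: s / temperature for word, s in scores.items()}
    top = max(scaled.values())
    # Subtracting the max keeps math.exp from overflowing; it doesn't change the ratios.
    weights = [math.exp(s - top) for s in scaled.values()]
    return rng.choices(list(scaled), weights=weights, k=1)[0]


scores = {"growth": 2.0, "expansion": 1.5, "scale": 1.0}  # hypothetical scores
rng = random.Random(7)
print([sample_next_word(scores, 1.0, rng) for _ in range(5)])   # can mix different words
print([sample_next_word(scores, 0.01, rng) for _ in range(5)])  # almost always "growth"
```

Each regeneration is effectively a fresh set of draws like these, repeated for every word of the reply, which is why the same prompt can lead to noticeably different responses.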

If you’re unsatisfied with a response or simply want an alternative, use the “Regenerate” or “Retry” feature. This not only produces a new response, but also creates a branch in the conversation. You can then compare the generated responses and continue the chat with the one that you prefer.

ChatGPT Regenerate and Branch

Both ChatGPT and Claude offer this regeneration option for the AI’s last response. It’s particularly useful when the initial reply is off-topic, incorrect, or incomplete. If regenerating doesn’t yield satisfactory results, consider rephrasing your input or providing additional context.

You can also start a branch from an earlier point in the conversation, for example by editing a previous message. This branching capability allows you to explore alternative paths and revisit earlier parts of the chat without losing the original thread or cluttering the context with irrelevant information.

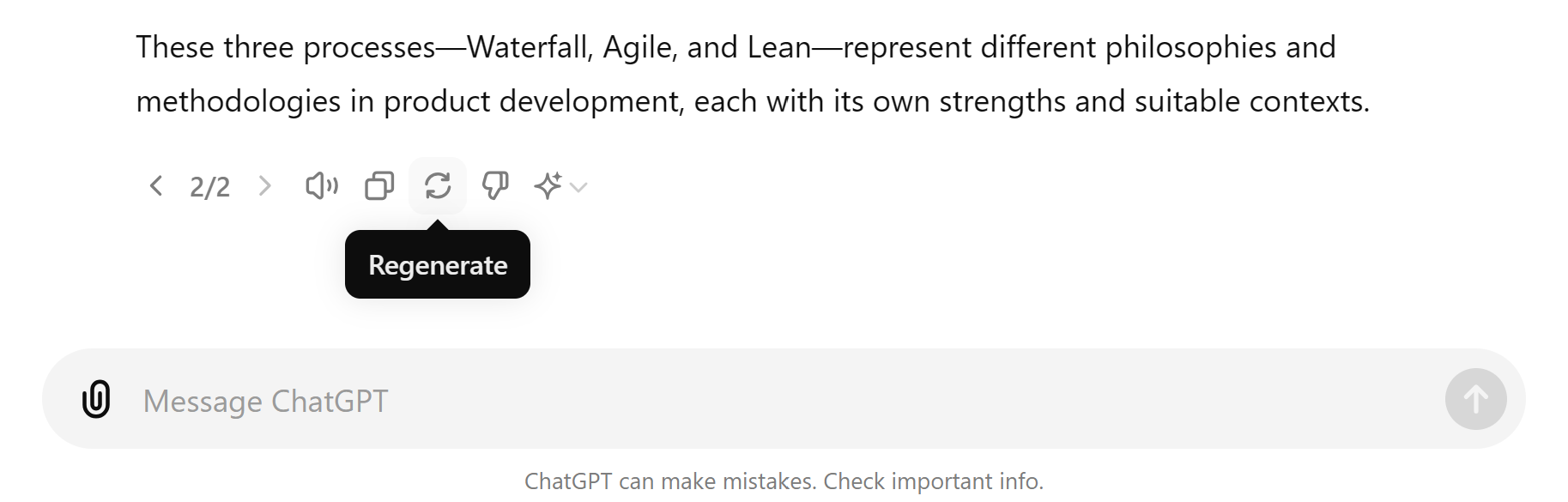
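Regenerating or editing turns a chat into a tree rather than a single line, and only the path from the first message to the current one is sent to the model as context. A minimal Python sketch of that idea; the `Message` class and `context_for` function are hypothetical and simplified, not how ChatGPT or Claude actually store conversations:

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)  # compare by identity; field-wise equality would recurse through the tree
class Message:
    role: str  # "user" or "assistant"
    text: str
    parent: Message | None = field(default=None, repr=False)
    children: list[Message] = field(default_factory=list, repr=False)

    def add_reply(self, role: str, text: str) -> Message:
        """Attach a new message below this one; calling it twice creates a branch."""
        child = Message(role, text, parent=self)
        self.children.append(child)
        return child


def context_for(node: Message) -> list[tuple[str, str]]:
    """Return the (role, text) pairs on the path from the root to `node`."""
    path = []
    current: Message | None = node
    while current is not None:
        path.append((current.role, current.text))
        current = current.parent
    return path[::-1]


root = Message("user", "Summarise this datasheet: [datasheet]")
first = root.add_reply("assistant", "Summary, attempt 1")
retry = root.add_reply("assistant", "Summary, attempt 2")  # "Regenerate" adds a sibling
follow_up = retry.add_reply("user", "Turn the summary into a tweet.")

print(context_for(follow_up))  # attempt 1 is not part of this branch's context
```

The abandoned attempt stays in the tree, so you can return to it, but it no longer takes up space in the context of the branch you continue.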

8. Reuse Chat Setups

When you’ve invested time in setting up a comprehensive context — such as providing product data sheets, defining market segments, or outlining launch processes — you can use this well-prepared chat session as a foundation for multiple related queries. This method, which we can call creating a “Branch Point”, offers several advantages:

- Consistency: Ensures all related tasks start from the same baseline of information.

- Time-saving: Eliminates the need to repeatedly provide the same background information.

- Context Maximization: Makes efficient use of the context window by retaining crucial information.

- Improved Accuracy: The AI has a richer, more stable context to work from, potentially leading to more accurate and relevant responses.

To create a Branch Point, once you have finished setting up your context, end your message with “Respond with OK and stand by for further instructions.” The AI will reply with a short acknowledgement, and this point in the chat becomes the starting point for future discussions.

For example, consider a product launch scenario for a new smartphone:

```mermaid
%%{
  init: {
    'theme': 'base',
    'themeVariables': {
      'primaryColor': '#268bd2',
      'primaryTextColor': '#fdf6e3',
      'primaryBorderColor': '#073642',
      'lineColor': '#657b83',
      'secondaryColor': '#2aa198',
      'tertiaryColor': '#eee8d5'
    }
  }
}%%
graph TD
  A[Upload Product Datasheet] --> B[Create Customer Segments]
  B --> C[Develop Personas]
  C --> D[Branch Point]
  D --> E["Develop Social Media Marketing Plan"]
  D --> F["Create Customer Support Scripts"]
  D --> G["Design In-Store Display Guidelines"]
  classDef highlight fill:#cb4b16,stroke:#073642,stroke-width:2px,color:#fdf6e3;
  class D highlight;
```

In this scenario, you would:

- Upload the product datasheet and work with the AI to create customer segments and personas.

- Once this foundation is established, create your Branch Point.

- From this point, you can explore multiple directions such as developing a social media marketing plan, creating customer support scripts, or designing in-store display guidelines.

This approach allows you to:

Using a Branch Point in claude.ai

- Preserve Context: All the foundational work remains fresh in the AI’s “memory”.

- Explore Multiple Directions: Start any of the branching tasks without others interfering or cluttering the conversation.

- Easily Backtrack: If you’re not satisfied with one direction, you can return to the Branch Point and start a new task without losing your foundational work.

By reusing your existing setup, you create a more efficient workflow, maintain consistency across related tasks, and make the most of the effort invested in establishing the initial context. This approach is particularly valuable for complex projects or ongoing work within a specific domain.
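The same idea can be expressed as a reusable prefix: the setup messages up to and including the “OK” acknowledgement are copied unchanged into every task. A minimal Python sketch based on the smartphone example; the message contents are placeholders and the `branch_from` helper is hypothetical:

```python
Message = tuple[str, str]  # (role, text)

# Setup work up to the Branch Point (placeholders for the real content).
BRANCH_POINT: list[Message] = [
    ("user", "Here is the product datasheet: [datasheet]"),
    ("assistant", "[summary of the datasheet]"),
    ("user", "Create customer segments and personas."),
    ("assistant", "[segments and personas]"),
    ("user", "Respond with OK and stand by for further instructions."),
    ("assistant", "OK"),
]


def branch_from(setup: list[Message], task: str) -> list[Message]:
    """Start a new branch: a copy of the shared setup plus one new task."""
    return [*setup, ("user", task)]


tasks = [
    "Develop a social media marketing plan.",
    "Create customer support scripts.",
    "Design in-store display guidelines.",
]
branches = [branch_from(BRANCH_POINT, t) for t in tasks]

for b in branches:
    print(len(b), b[-1][1])  # each branch = the 6 setup messages + its own task
```

Because each branch copies the prefix, work done in one branch never appears in another branch's context.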

Both ChatGPT and Claude have more robust options that work with teams or more complicated context setups:

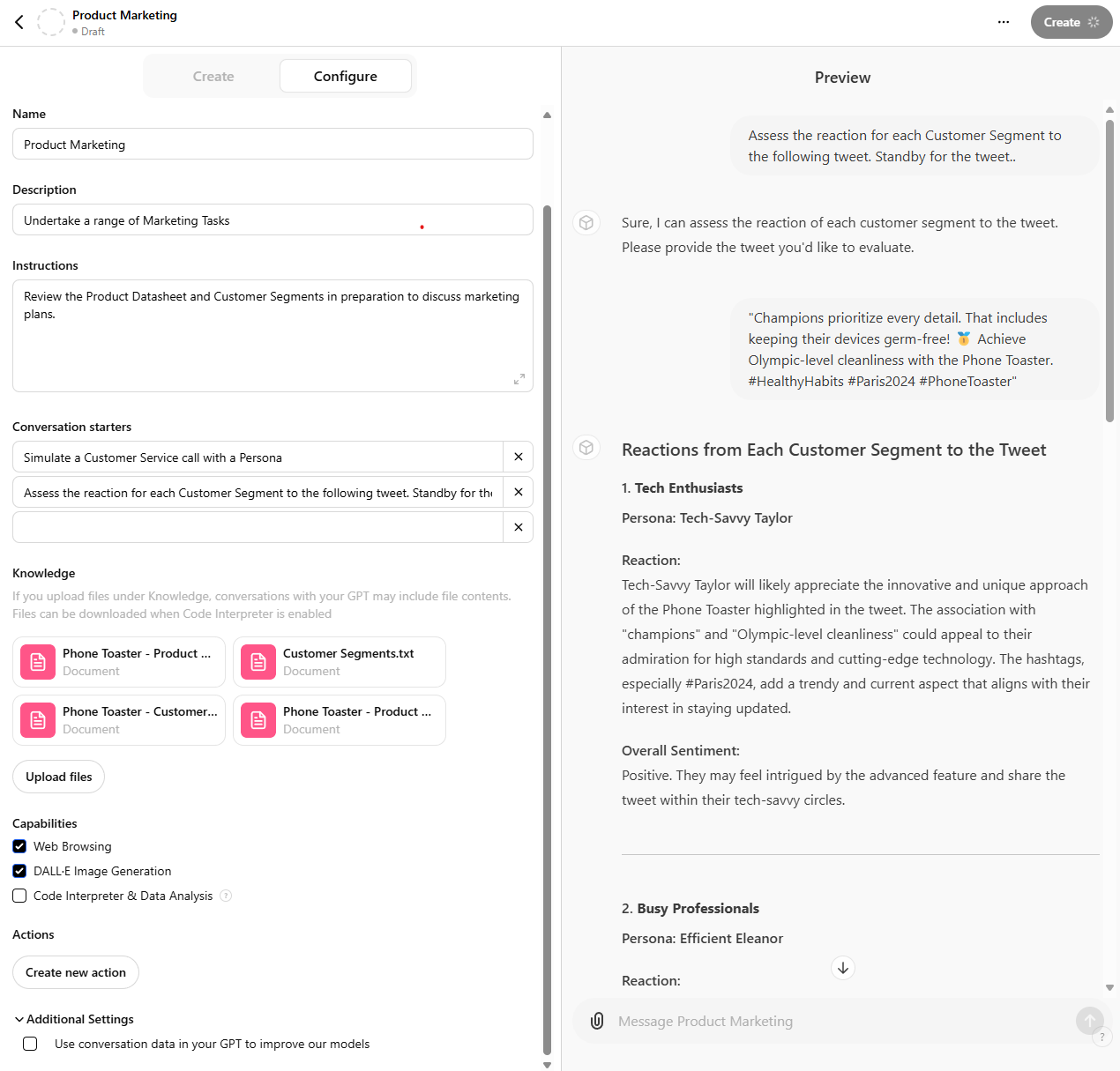

ChatGPT - Create a Custom GPT

If you are using a paid ChatGPT plan (Plus, Team or Enterprise), you can create a “Custom GPT” that lets you upload relevant files and set standard instructions and conversation starters. This lets you efficiently reuse the setup and even share it with others. Be aware that the security options for sharing are limited unless you have a Team plan.

ChatGPT Custom GPT for Product Marketing

Also note that if you are using a personal Plus plan, by default your data will be used for model training. You can switch this off under “Additional Settings” (shown in the screenshot above).

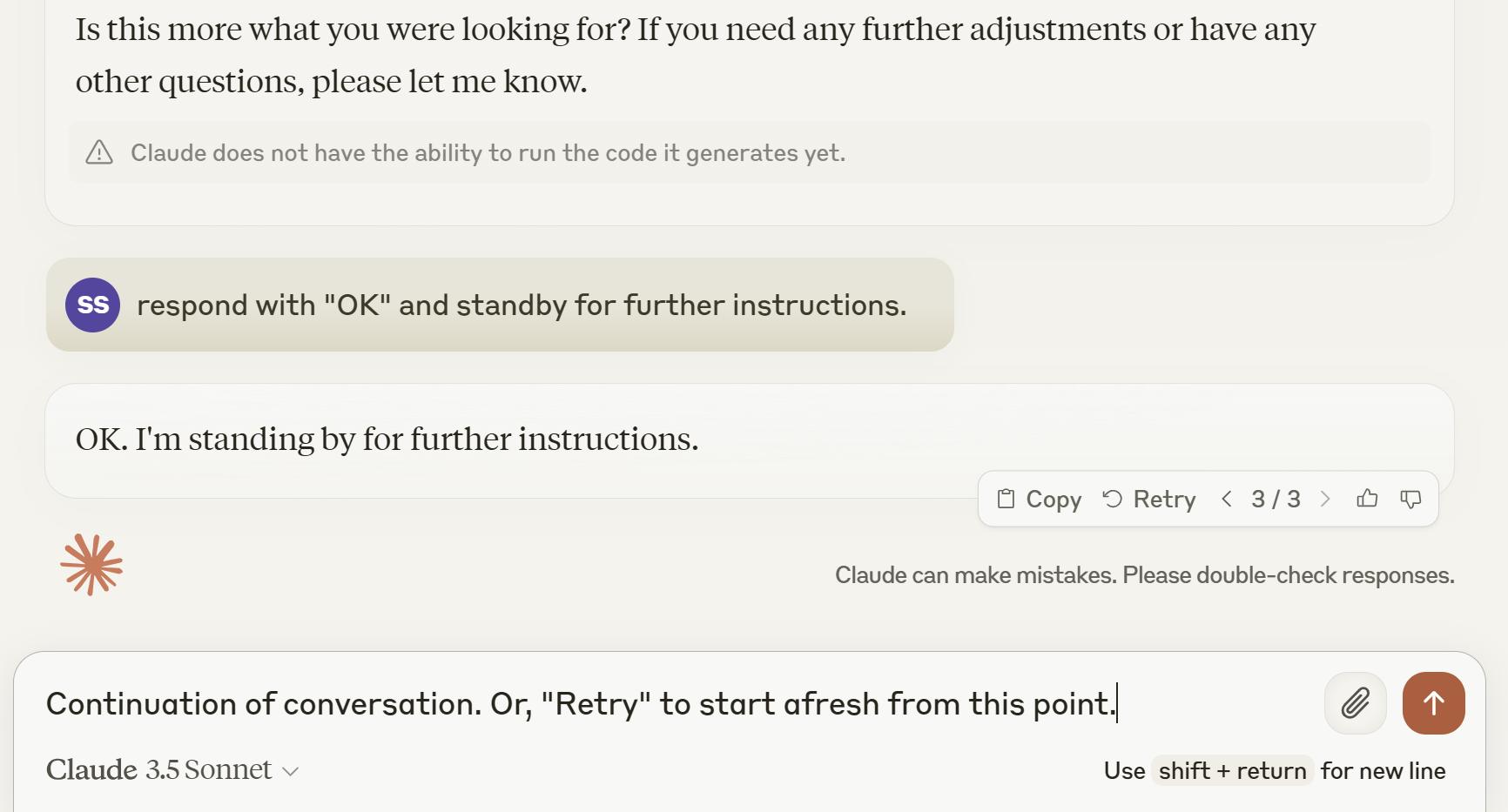

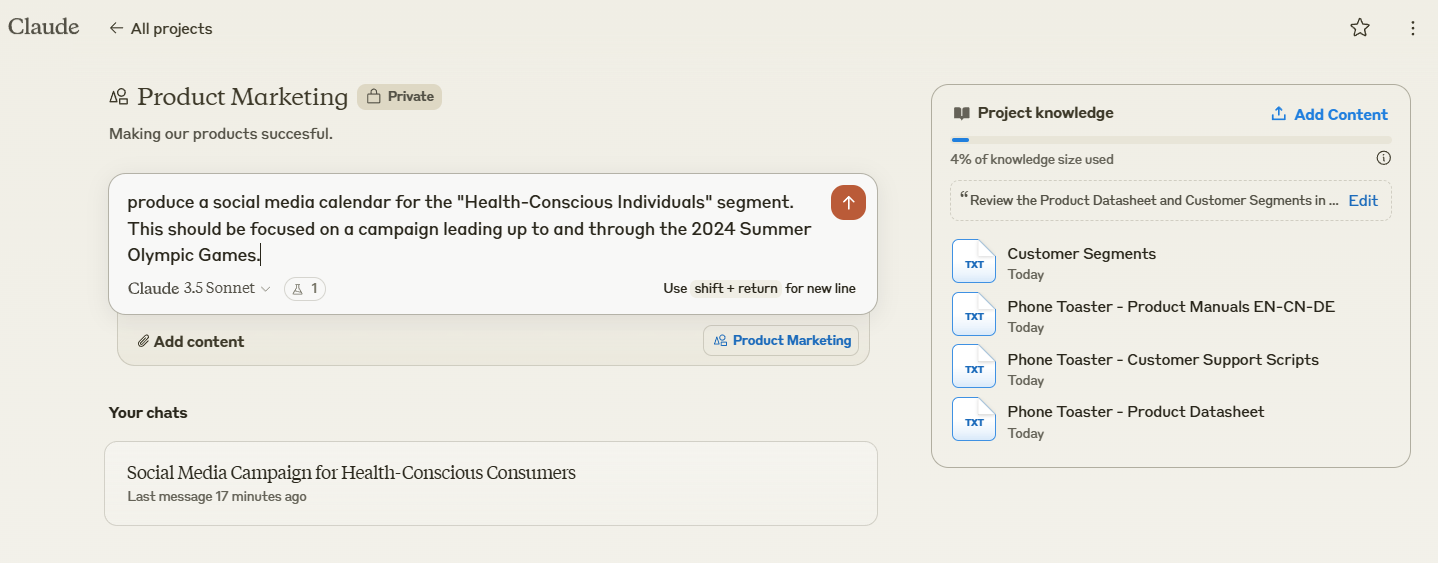

Claude.ai - Set up a Project

Claude.ai offers a similar feature, Projects, for Pro and Team plan users. It allows you to upload files, set custom instructions and share with a team.

Claude.ai Project for Product Marketing

9. Test and Leverage the AI’s Knowledge

AI models are trained on vast amounts of data up to their knowledge cut-off date[3]. To make effective use of this knowledge:

- Test the AI’s understanding of specific topics you plan to discuss. This helps identify any knowledge gaps or outdated information.

- If you need detailed knowledge on a particular subject, ask the AI questions about it. This brings relevant information to the forefront of the conversation context.

- When you want the AI to use a specific approach (e.g., a particular project management method), explicitly ask it to draw on that knowledge.

- If the AI demonstrates gaps in knowledge crucial to your task, provide the necessary information yourself.

This approach ensures that the AI’s responses are based on accurate, relevant, and up-to-date information tailored to your needs.

10. Summarise and Start Afresh

When a conversation becomes lengthy or starts to lose focus, ask the AI to summarise the key points before starting a new chat. This technique allows you to capture the essential information from a long discussion and carry it forward efficiently.

Use a prompt like this to generate a comprehensive summary[4]:

```
Provide a comprehensive and detailed summary of our conversation so far, capturing:

1. The main context and background of our discussion
2. All recent and relevant points
3. Any actionable insights or decisions made
4. Key stakeholder considerations

Please ensure the summary:

- Is impersonal (exclude references to "you" or "I")
- Uses clear, separated paragraphs
- Employs bullet points where appropriate for clarity
- Synthesizes related ideas
- Is easy to read and understand

This summary will be used as a starting point for a new conversation, so please ensure it provides a complete overview of our discussion.
```

Benefits of this approach include:

- Consolidates key information from a long conversation into a concise format.

- Provides a clear starting point for a new chat session, maintaining continuity of thought.

- Helps identify any gaps or inconsistencies in the discussion so far.

- Allows you to refocus the conversation if it has drifted off-topic.

After receiving the summary, review it for accuracy and completeness. You can then use this summary as the initial context for a new chat session, allowing you to build on your progress while working within a fresh context window.
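When starting the new chat, the reviewed summary can be front-loaded and wrapped in delimiters like any other reference material, with the next instruction placed at the end. A minimal Python sketch; the `<summary>` tag name and the `fresh_start_message` helper are hypothetical choices, not a feature of either service:

```python
def fresh_start_message(summary: str, next_task: str) -> str:
    """Build the first message of a new chat from a reviewed summary."""
    return (
        "Here is a summary of a previous conversation:\n\n"
        f"<summary>\n{summary.strip()}\n</summary>\n\n"
        f"{next_task.strip()}"  # instructions go last, after the reference material
    )


message = fresh_start_message(
    "- Target segments: [segments]\n- Decisions so far: [decisions]",
    "Using this summary, draft the launch email.",
)
print(message)
```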

Summary

As conversations with AI models become longer and more complex, implementing these strategies can help maintain the quality of responses. While AIs generally perform well in short interactions with minimal management, employing these techniques becomes crucial as task complexity increases.

To further enhance your AI interactions, consider reviewing the prompt engineering guides provided by major AI platforms:

| Platform | Prompt Engineering Guide |
|---|---|
| OpenAI | Prompt engineering |
| Anthropic Claude | Prompt engineering overview |
| Meta Llama | Prompt Engineering |

These resources offer additional insights and techniques to refine your prompts and maximize the effectiveness of your AI interactions across different platforms. Also take a look at our previous article on Role Prompting for more ideas.

Footnotes

[1] A fundamental part of how the AI produces a response is how it uses its available “attention”. A model is built from a stack of layers, and the attention mechanism in each layer is split into a number of “heads” that process different aspects of the input simultaneously. These heads allow the model to capture various relationships and patterns in the conversation from different perspectives. For example, some heads might focus on nearby words, while others might capture long-range dependencies or semantic relationships. This multi-head approach enables the AI to perform a rich, nuanced analysis of the conversation context, leading to more sophisticated understanding and generation of language. Small models like Llama 3.1 8B use 32 heads and 32 layers, whereas the largest model, Llama 3.1 405B, uses 128 heads and 126 layers. Generally, more heads and layers allow the model to handle longer, more complex conversations and produce responses with greater depth and subtlety, at the expense of computational resources. Making AIs more efficient is an active area of research. For example, modern models use a technique called GQA (Grouped Query Attention), in which groups of query heads share the same key and value heads, to improve speed and memory usage. The recent “The Llama 3 Herd of Models” paper provides a comprehensive overview of building a state-of-the-art model.

[2] ChatGPT allows configuration through the Custom Instructions feature. ChatGPT also has an automatic Memory feature that may influence new chats.

[3] The exact sources and weighting of training data for most models are proprietary. To give an indication of the specifics, though, the original Meta Llama model launched in Feb 2023 had the training data below. The most recent model (Llama 3.1), launched about 17 months later, has been trained on 10 times as much data, including multiple languages, audio and video.

| Source | Content | Weighting | Size |
|---|---|---|---|
| English CommonCrawl | English language web content | Very High (67.0%) | 3.3 TB |
| C4 | Cleaned web pages | High (15.0%) | 783 GB |
| GitHub | Open-source code | Medium (4.5%) | 328 GB |
| Wikipedia | Encyclopedia articles in 20 languages | Medium (4.5%) | 83 GB |
| Books | Project Gutenberg and Books3 collection | Medium (4.5%) | 85 GB |
| ArXiv | Scientific papers | Low (2.5%) | 92 GB |
| Stack Exchange | Q&A from various domains | Low (2.0%) | 78 GB |

[4] This prompt was inspired by the “Compression” feature in Big-AGI. Refer to its source code for more ideas.
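To make the point in footnote 1 about GQA and memory more concrete: while generating, a model caches a key and a value vector per layer and per key/value head for every token in the context (the “KV cache”), and GQA reduces the number of key/value heads. The Python sketch below assumes the published Llama 3.1 configurations as we understand them (8 key/value heads and a head size of 128 for both models, alongside the layer and head counts given above) and 16-bit (2-byte) values; treat these figures as assumptions to check against the model cards.

```python
def kv_cache_bytes_per_token(layers: int, kv_heads: int, head_dim: int, bytes_per_value: int = 2) -> int:
    """Memory needed to cache keys and values for one token of context."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value  # 2 = one key + one value


# Assumed configs: (layers, query heads, key/value heads with GQA, head size)
MODELS = {
    "Llama 3.1 8B": (32, 32, 8, 128),
    "Llama 3.1 405B": (126, 128, 8, 128),
}

for name, (layers, q_heads, kv_heads, head_dim) in MODELS.items():
    gqa = kv_cache_bytes_per_token(layers, kv_heads, head_dim)
    mha = kv_cache_bytes_per_token(layers, q_heads, head_dim)  # one K/V head per query head
    full_window_gib = gqa * 128_000 / 2**30
    print(f"{name}: {gqa / 1024:.0f} KiB/token with GQA vs {mha / 1024:.0f} KiB without "
          f"(~{full_window_gib:.1f} GiB for a 128,000-token context)")
```

Under these assumptions, GQA shrinks the cache by a factor of 4 for the 8B model (32 query heads sharing 8 key/value heads) and 16 for the 405B model (128 sharing 8).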