Claude Projects - First Impressions

Introduction

Yesterday, Anthropic released a new “Projects” feature for their Claude.ai front-end. It lets users work with multiple documents and set up large-context conversations without repeated uploads or extensive priming at the start of each session.

Context Stuffing vs. RAG Snippets

When working with content through LLM front-ends like Claude.ai, we typically encounter two distinct methods:

- Context Stuffing - This technique loads complete documents into the LLM's context window (its working memory for the conversation), so the model has access to all of their details throughout the interaction.

- RAG Snippets (Retrieval Augmented Generation) - In contrast, this method splits documents into smaller chunks and calculates an embedding for each one: a numerical vector that captures the chunk's meaning, so that passages semantically similar to the current question can be found during a conversation. Only these small, relevant parts of the documents are then inserted into the context.
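To make the difference concrete, here is a minimal Python sketch of both approaches. It is an illustration only, not how Claude.ai or any particular RAG product works: the `embed` function is a toy bag-of-words counter standing in for a real embedding model, and the chunk size, `k`, the helper names and the sample documents are all assumptions.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items())  # missing terms count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """RAG Snippets: return only the k chunks most similar to the query."""
    chunks = [c for doc in documents for c in chunk(doc)]
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, context_parts: list[str]) -> str:
    """Place the supplied context ahead of the user's question."""
    return "\n\n".join(context_parts) + f"\n\nQuestion: {question}"


# Hypothetical sample documents.
docs = [
    "Personas: Priya is a product manager who needs weekly roadmap summaries.",
    "Style guide: use British English and avoid jargon in customer-facing copy.",
]
question = "Which persona needs roadmap summaries?"

stuffed = build_prompt(question, docs)                       # Context Stuffing: every document
rag = build_prompt(question, retrieve(question, docs, k=1))  # RAG: best-matching chunk only
print(rag)
```

Swapping `embed` for a real embedding model improves the quality of the matches but not the shape of the pipeline. Note that with this toy `embed`, a differently worded query such as "Which user wants progress reports?" scores zero against both documents, which is the kind of retrieval miss RAG risks.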

RAG Snippets are cost-efficient and useful when dealing with extremely large datasets. However, they limit what the LLM ‘sees’ and carry the risk that the search misses relevant passages or selects irrelevant ones.

Context Stuffing is beneficial for complex content creation or review tasks, as it allows comprehensive access to information. However, it is more resource-intensive, as it consumes more input tokens, and it requires careful management of the context to keep responses coherent.
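A back-of-the-envelope calculation shows why the token cost adds up. Every number below is an assumption chosen for illustration: a heuristic of roughly four characters per token, hypothetical document and snippet sizes, and a ten-turn chat in which the supplied context is processed again on every turn.

```python
import math

CHARS_PER_TOKEN = 4  # assumption: a common rough heuristic for English prose


def estimate_tokens(chars: int) -> int:
    """Very rough token estimate from a character count."""
    return math.ceil(chars / CHARS_PER_TOKEN)


def context_tokens_over_chat(context_chars: int, turns: int) -> int:
    """Input tokens spent on the supplied context across a chat, assuming the
    same context is processed again on every turn (chat history and any
    prompt caching are ignored)."""
    return estimate_tokens(context_chars) * turns


# Hypothetical project: three documents of ~60,000 characters each.
stuffed = context_tokens_over_chat(3 * 60_000, turns=10)
# Hypothetical RAG setup: three retrieved snippets of ~1,000 characters each.
rag = context_tokens_over_chat(3 * 1_000, turns=10)

print(stuffed, rag, stuffed // rag)  # 450000 7500 60
```

Real counts depend on the model's tokenizer, and a growing chat history adds to both figures, but the order-of-magnitude gap between the two approaches is the point.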

Projects uses Context Stuffing, making it well-suited for complex content creation tasks where having a comprehensive view over a set of related artifacts is beneficial.

Workflow

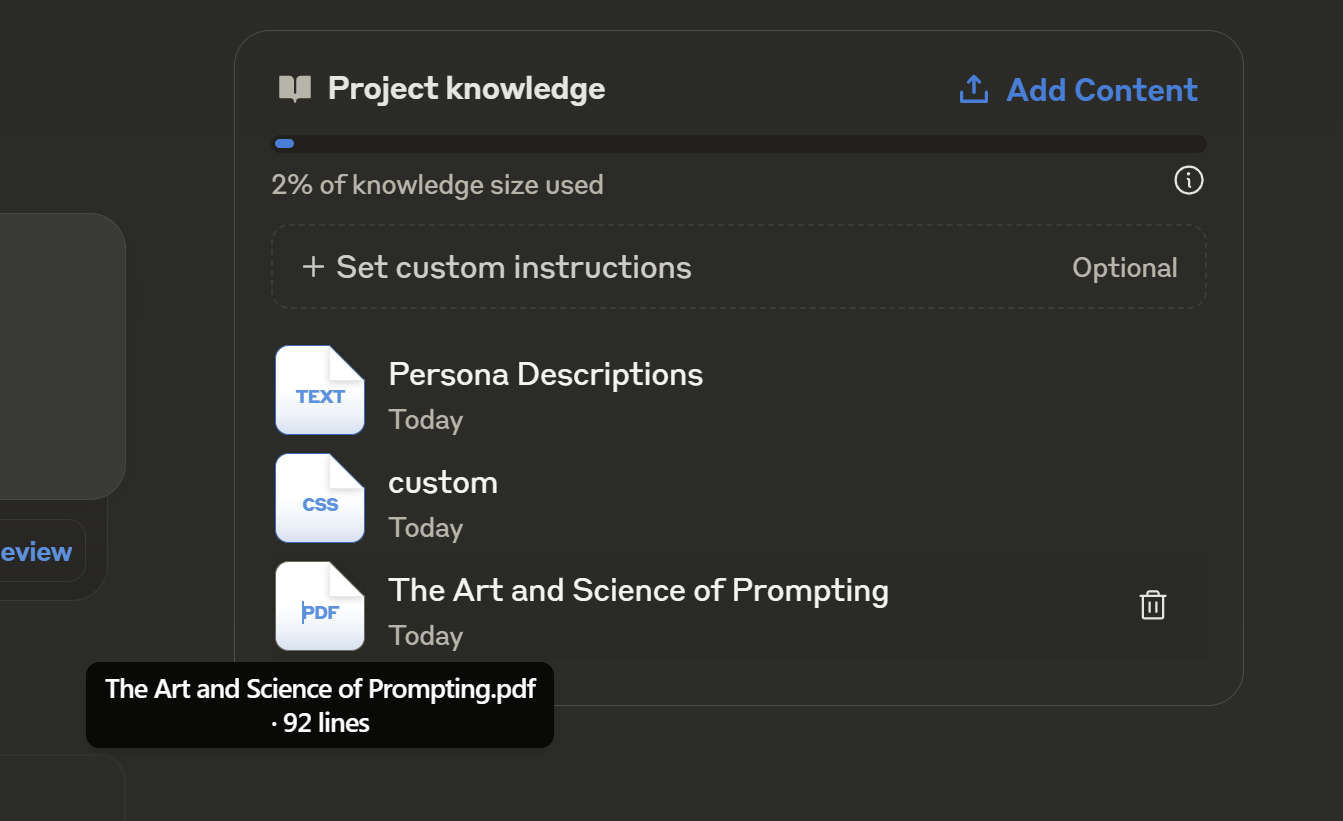

After creating a project, you can upload documents to a “Project Knowledge” store. The store shows how much of the available “knowledge size” is consumed, as a percentage, which is helpful when deciding what really needs to be in or out of a project.

You can also set a “Custom Instruction,” which is a convenient way of storing the “set-up” instructions for the chat.
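Conceptually, a project bundles a Custom Instruction with a set of documents that are placed into the context at the start of every chat. The sketch below illustrates that idea under stated assumptions and is not Anthropic's implementation: the `Project` class, the XML-style `<document>` tags, the four-characters-per-token estimate and the 200,000-token budget are all hypothetical choices (200,000 tokens matches the context window Anthropic advertises for its Claude 3 models, but how Claude.ai actually calculates its “knowledge size” percentage is unknown here).

```python
import math
from dataclasses import dataclass, field

CHARS_PER_TOKEN = 4       # assumption: rough heuristic for English text
CONTEXT_BUDGET = 200_000  # assumption: tokens available for project knowledge


@dataclass
class Project:
    """Hypothetical model of a project: one custom instruction plus documents."""
    custom_instruction: str
    documents: dict[str, str] = field(default_factory=dict)  # name -> text

    def knowledge_tokens(self) -> int:
        """Rough token estimate for all uploaded documents."""
        return sum(math.ceil(len(text) / CHARS_PER_TOKEN) for text in self.documents.values())

    def knowledge_percent(self) -> float:
        """Share of the assumed budget consumed by the documents."""
        return 100 * self.knowledge_tokens() / CONTEXT_BUDGET

    def build_context(self, user_message: str) -> tuple[str, str]:
        """Return (system prompt, first user message) with every document stuffed in."""
        docs = "\n".join(
            f'<document name="{name}">\n{text}\n</document>'
            for name, text in self.documents.items()
        )
        return self.custom_instruction, f"{docs}\n\n{user_message}"


project = Project(
    custom_instruction="You are helping a product team. Use the attached personas.",
    documents={"personas.md": "Priya: product manager. " * 2_000},  # 48,000 characters
)
print(f"{project.knowledge_percent():.1f}%")  # 6.0%
system, first_message = project.build_context("Draft a roadmap update for Priya.")
```

Keeping the instruction separate from the documents mirrors the split between “Project Knowledge” and the “Custom Instruction” in the interface, and a per-project percentage makes it easy to see which documents are worth trimming.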

Usage

Once set up, you simply start a chat with your customised Context (Documents and Instructions) ready to work with. I’ve found that it sometimes needs a small nudge to use the content that is present, but it then works exactly as expected.

Document uploads are limited to 30 MB, and I did have some trouble with text extraction from a .pptx file. However, for many use-cases, text snippets will be more appropriate and context-friendly. There is a small front-end for adding snippets, but as of today they are not directly editable from the interface.

Projects simplifies the management of complex contexts and workflows and, because it is available through the consumer front-end, extends these LLM use-cases to casual users.

This is also going to be very helpful for multi-user teams, where particular definitions (e.g. Personas for Product Management) are shared and consistent conversation set-up is key.

Projects - Thoughts and Future

Having tried Projects for a few small jobs so far, I’ve got the following thoughts:

- Context Stuffing is a relatively expensive approach, but for most users it will give the best results. It’s good to see Anthropic offering this feature, but I can’t help wondering whether it will significantly increase their overall per-user session cost. Perhaps this feature will come at an additional charge sooner rather than later?

- Document Toggles. The ability to easily toggle specific documents on or off when starting a chat would be welcome. It would make it quick to have a more targeted conversation over a narrower set of documents, which is especially useful if coherence issues come into play (and potentially more cost-efficient).

- Round-tripping. Whilst Claude can discuss changes to documents, it can’t update them or create new documents directly from the chat. This would be a hard feature to get right, but could be extremely powerful. That said, it’s still very convenient to re-upload a changed document and then start a new conversation with a fresh context.

- Document Store Integration. The ability to integrate with a GitHub repository, Google Docs or other Document Store would be welcome, and is surely on the roadmap.

- Chat Limitations. Regular Claude users will already be familiar with the frustration of hitting their chat rate limits. This could be a real challenge with Projects, as interruptions to a Project chat are likely to be more disruptive than in a general chat, and a large project context may make each message count more heavily against the limit.

- Different Custom Instructions. Being able to pick from more than one Custom Instruction would be convenient. For example, when working on a website, one could be geared towards producing Content and another towards Styling, Layout and JavaScript adjustments.

Final words

Projects is a welcome addition to the fast-evolving marketplace of LLM front-ends, and provides a simple, practical tool that opens up sophisticated workflows to more casual users. It’s good to see such aggressive use of the Context Window, as this will usually produce better, more predictable outcomes for less technically inclined users.